That MIT paper about AI enhancing scientific productivity that everyone has been talking about is fake

Where were all the internet peer reviewers?

In November, a graduate student in MIT's economics department, Aidan Toner-Rodgers, published a preprint that has had an outsized impact on recent discourse about AI, science, and economic productivity. The paper appeared to confirm the widespread belief that AI will save us from scientific and technological stagnation.

The paper came up organically in a conversation I had a few days ago. The next day, I learned that the paper is likely 100% fake. A press release from MIT states that it could not ascertain the provenance of any of the data presented. The graduate student responsible for the paper has been expelled, and the paper has been withdrawn by arXiv for violating arXiv's code of conduct.

This news is a month old, but somehow I missed it.

I'm afraid the news hasn't percolated as far as it should have. That's why I want to discuss what happened here, even though the story has already been covered by the Wall Street Journal, Stuart Buck, and Ben Shindel.

What happened?

The paper purported to present findings from a large randomized controlled trial of N=1,018 materials science researchers (!), all located at a single R&D lab at a "US-based firm".

The top-line finding was that researchers given access to an "AI tool" discovered 44% more materials, leading to a 39% increase in patent filings and a 17% increase in new prototype materials.

Simply stating these results doesn't do the paper full justice, though: it is 78 pages long and contains a variety of sophisticated sub-analyses and additional findings. For instance, the paper also claimed that a subset of less experienced scientists didn't benefit from the tool, while more experienced scientists were able to capitalize on it.

Toner-Rodgers presented the paper at a National Bureau of Economic Research conference just two days after posting it on arXiv. It was covered by the Wall Street Journal, The Atlantic, Nature, and numerous other outlets. Since then, it has been cited by the European Central Bank and in Congress, and it has accumulated dozens of citations.

The findings confirmed a widely held belief that AI is the answer to scientific and technological stagnation. The paper also acknowledges help from Daron Acemoglu and David Autor, two big names in economics. In the Wall Street Journal, Acemoglu praised the paper as "fantastic". His praise was particularly meaningful given his broader skepticism about AI's economic impact. It's interesting that both of these researchers, who apparently saw the paper in advance, were not surprised that a first-year graduate student could do so much data analysis on his own (more on that in a bit).

It’s all fake?!

According to the Wall Street Journal, the investigation at MIT began when a "computer scientist with expertise in materials science" started emailing people in the MIT Economics Department with questions. Nobody could answer them. Eventually someone asked MIT administrators to investigate.

Here’s what MIT administrators said in their statement:

“While student privacy laws and MIT policy prohibit the disclosure of the outcome of this review, we are writing to inform you that MIT has no confidence in the provenance, reliability or validity of the data and has no confidence in the veracity of the research contained in the paper…”

That’s a pretty strong statement! Daron Acemoglu and David Autor also issued a statement, which reads in part:

“… we want to be clear that we have no confidence in the provenance, reliability or validity of the data and in the veracity of the research.”

Again, wow. I think it’s pretty safe to say the paper is completely fake.

After the news broke, Will Wang discovered on X that Corning had filed a complaint against the paper's author, Aidan Toner-Rodgers, for registering the domain corningresearch.com. It appears the author may have intended to set up a fake website to host fake data.

The skeptics

You can find me expressing a bit of skepticism about the paper the day it was announced:

However, I never imagined the paper was fake. My skepticism concerned how well the findings would generalize and what they implied about AI-driven productivity gains more broadly. From 2016 to 2018, I did research on using machine learning to predict the properties of molecular crystals and on using generative models to generate new molecular structures. I'm pretty far behind on the state of the art, but I've loosely followed the field over the years.

When I saw the MIT paper, two similar papers came to mind, both of which garnered significant hype but turned out to be "nothing burgers". You might remember them blowing up on social media:

The first is a 2019 paper from the startup Insilico Medicine that appeared in Nature Biotechnology. The researchers announced they had used generative AI to discover a new drug molecule, which they then synthesized and tested successfully in mice, all in 46 days. The only issue is that the drug was nearly identical to existing drugs, drugs that had been in the model's training data. That fact was only revealed later in a couple of blog posts that relatively few people read. Experts who looked at the new drug molecule said it was utterly uninteresting. In other words, the model was no better than a pharmaceutical chemist sketching candidate molecular structures on a dinner napkin.

The second paper, also from 2019, was a Nature paper that claimed to have invented an "AI" that could "predict in advance the discovery of future materials". The "AI" (actually just a Word2Vec embedding model) was trained only on literature published up to a particular cutoff year. The embeddings were then used to predict materials that would appear in the literature after that year. However, the materials it "predicted in advance" were already listed in the literature as candidate materials. Furthermore, the model was developed and tested on just one narrow class of materials: thermoelectrics. Thermoelectrics were chosen because they are an exceptionally easy target: they are described with a standard nomenclature (unlike many other material classes), and there are relatively few candidate elements one might consider. Many critics pointed out that the methodology was unlikely to translate easily to other material types.

Now I want to talk about two people who voiced skepticism about the MIT paper: Robert Palgrave and Stuart Buck.

Robert Palgrave is a Professor of Inorganic and Materials Chemistry at University College London. In a Twitter thread written less than a week after the paper came out, he voiced a number of concerns. Essentially, he pointed out that the paper's findings were out of line with previous literature on the subject. However, the discussion gets quite technical, and I'm afraid he was largely ignored. Palgrave reminds me of the superconductivity experts who expressed skepticism about LK-99 but were also largely ignored during the LK-99 mania.

Stuart Buck is the founder of the Good Science Project, and he ends up looking really good here. Here's what he said back in December:

After the news broke about the paper, Stuart shared this:

Hindsight is 20/20.

It's striking how many red flags in this paper went unremarked at first. The paper got a lot of eyeballs, so if there was ever a chance for internet peer review to prove itself, this was it!

To begin with, there's an obvious question here: why would a company fund a very large trial on AI, get amazingly positive results, and then give all its data to a random first-year Ph.D. student at MIT? On top of that, why would it choose to remain anonymous? Why wouldn't it trumpet these findings at every possible venue? Most companies are quick to brag about cool ways they are using AI, to help attract investors and talent. That includes big materials companies like Corning:

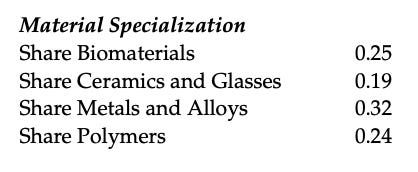

The next red flag was the sheer variety of materials that the AI tool could be applied to:

The structures of these materials at the atomic level are vastly different, and so are the ways they are represented. From the literature I have seen, machine learning approaches developed for generating metals will not be applicable to biomaterials without considerable modification. The idea that one monolithic AI tool could work across all these domains is certainly not unimaginable, but it is out of line with the existing literature.

Additionally, Ben Shindel points out that the author uses a sophisticated technique for measuring material similarity. This is surprising for two reasons: first, it's unexpected that a first-year economics student would be able to implement it, and second, the method is likely to run into issues with polymers and biomaterials (ChatGPT confirms this). These are the sorts of technical issues that only domain experts (like peer reviewers) are likely to notice (Ben Shindel did his PhD in materials science).

Ben points out some other red flags; check out his article for details. Stuart Buck provides this observation:

Pointed questions are no longer welcome in science

Stuart Buck’s questioning was not received well:

“I heard from other people last year that it was a breach of etiquette for me even to ask questions about the AI paper, because the undertone or implication might be that the paper could conceivably, possibly, maybe be fraudulent, and that was the worst possible thing you could ever even remotely hint at.

By analogy: when you get a mortgage to buy a house, the bank typically wants documentation about where the down payment came from. They're not accusing *you* of malfeasance per se, but the source of the down payment (e.g., your own savings versus a gift from parents) affects what the bank thinks you can actually repay. If $100,000 showed up in your account last month with no plausible explanation, the bank will want to know more.

That said, I don't want any new bureaucratic requirements for research akin to IRBs. Absolutely not. That would only slow everything down, and probably wouldn't deter actual fraudsters very much anyway.

But cultural change would be nice. It should be much more normalized and routine to ask probing questions about the provenance of data, about how scholars had time to do a particularly demanding project, and so forth.” - Stuart Buck

This is something I've heard several times over the years: asking pointed questions is increasingly unwelcome, and the culture of science is becoming less critical. A culture of criticism is foundational to scientific progress, and if we lose it, scientific progress will stagnate, no matter how much funding is provided.

I thought econ was famously critical, especially among the social sciences: a discipline where people are happy to tear into a colleague's work.

I suspect one way the author avoided that kind of critical feedback was by not presenting the paper at conferences and in department work-in-progress sessions. Those are venues where work gets scrutinized, criticized, and improved. But given that this was outright fraud, he probably avoided those community checks because they would have been opportunities to be exposed.

For what it's worth, ML Twitter feels unusually adversarial, with people poking holes in viral papers. In contrast, computational biology Twitter feels less critical: replies and quote tweets tend to congratulate the authors on the accomplishment rather than engage with the claims. It's obviously impossible to say whether this has any causal relationship with the fact that ML seems to be progressing more rapidly than computational biology, but it is interesting.