How common is scientific fraud?

More common than we'd like to admit

Recently, scientific fraud has been in the news again:

Stanford president Marc Tessier-Lavigne resigned after it came to light that at least four of his papers (including ones published in Science and Nature) contain fraudulent results. He will return to doing research full time as a tenured faculty member in the Stanford Biology Department. (Sadly, no further punishment is forthcoming: he is being “punished” by being told to return to research full time, the very thing that got him in trouble.)

In spring 2023, Ranga Dias’s group published a paper in Nature claiming that a material made of lutetium, hydrogen, and nitrogen stays superconducting at room temperature and high pressure. The paper received much fanfare at the time but is now viewed with deep suspicion, because Dias recently had another paper retracted for fraud (an investigation by the editors of Physical Review Letters found evidence of data fabrication). This is Dias’s second recent retraction: in November 2022, a 2020 superconductivity paper of his was retracted from Nature for “data-processing irregularities”. He was also found to have plagiarized 40% of his Ph.D. thesis. The fact that he is still a professor at the University of Rochester is a testament to how little academic institutions care about maintaining the integrity of science.

Much debate is circulating regarding a letter to the editor entitled “The proximal origin of SARS-CoV-2” that was published in Nature Medicine in March 2020. The paper was quite influential and was used over and over as a sort of cudgel against proponents of the lab leak hypothesis. Based on recent document leaks, many are now calling it fraudulent. In the conclusion the authors state “we do not believe that any type of laboratory based scenario is plausible.” Yet Slack and email messages written while the paper was being drafted and reviewed show that at least two of the authors did think the lab-leak theory was plausible. After they wrote the first draft, lead author Kristian Andersen wrote on Slack that “accidental escape (from a lab) is in fact highly likely - it’s not some fringe theory”. Another author said “lab passage I’m open to and can’t discount”. After some internal debate, the authors decided the lab-leak theory should be rejected in their paper to prevent (in the words of one of the authors) a “shit show”. They also purposefully left out recent pangolin data that weakened the case that SARS-CoV-2 could have made the jump to humans via evolution in the wild.

A retracted paper co-authored by famous behavioral economist Dan Ariely is in the news again. The paper claimed that putting an honesty pledge at the beginning of a survey made people more truthful. Multiple follow-up studies could not replicate the findings. Then an investigation in 2020 found that the data they had used was utterly unrealistic. The investigators also found evidence that one dataset had been fabricated by duplicating data from a different part of the paper and adding random numbers to it. In response to these findings, the journal retracted the paper in 2021. Records show that Ariely was the last to edit the spreadsheet containing the unrealistic data. Ariely blames the faulty data on an insurance company they were working with. However, the insurance company would have had no motive to fake the data, while Ariely did. The paper is in the news again because an investigation by Data Colada discovered evidence of fraud in four papers by Francesca Gino. Amazingly, it appears that Gino contributed a separate fraudulent dataset to the same paper. Data Colada summarizes it thusly: “That’s right: Two different people independently faked data for two different studies in a paper about dishonesty.” Harvard has placed Gino on administrative leave, but as of the writing of this piece Ariely remains a faculty member at Duke University.

If you had asked me 10 years ago how common scientific fraud was, I would have said “very rare”. People go into science because they want to discover the truth about the nature of reality, and they recognize that science is the best way we have of arriving at that truth. So why would a scientist knowingly fabricate falsehoods and corrupt the integrity of the entire system? The idea is so horrifying to contemplate that I think most of us flinch away from even considering the possibility. Here’s how Stuart Ritchie puts it:

“The very idea (of fraud)… is so abhorrent that many adopt a see-no-evil attitude, overlooking even the most glaring signs of scientific misconduct. Others are in denial…” Stuart Ritchie, in Science Fictions

Over time, though, I became less naive. In grad school I observed people “cleaning” data to make it conform better to what was expected. I read about the replication crisis and how people were using questionable practices like data dredging. I saw how people were more interested in getting publications than in doing rigorous work.

Then I uncovered suspected fraud in a lab I worked in, which was a bit of a wake-up call. In the past year or two I’ve asked several people whether they have encountered fraud, and three said they had observed evidence of it. All three were told to ignore it, and two suffered repercussions for trying to expose it. I’ll be writing up some of these stories in a future post.

All-too-human desires for status and money lead many researchers to commit fraud. It’s easy to commit and easy to get away with. As James Heathers pointed out recently on his new Substack, everyone has what police call “motive, means, and opportunity”:

“everyone has:

the motive (publishing lots of science for fame and funding),

the means (manipulating the high-level data and images typically presented in a paper has been trivial since Babbage was complaining about scientific bullshit in the 19th C; manipulating whole data sets is much more challenging),

and the opportunity (submissions are open, and anyone can technically publish anything anywhere)…

…at every point, and anyone pretending they don’t is a goddamn simpleton.”

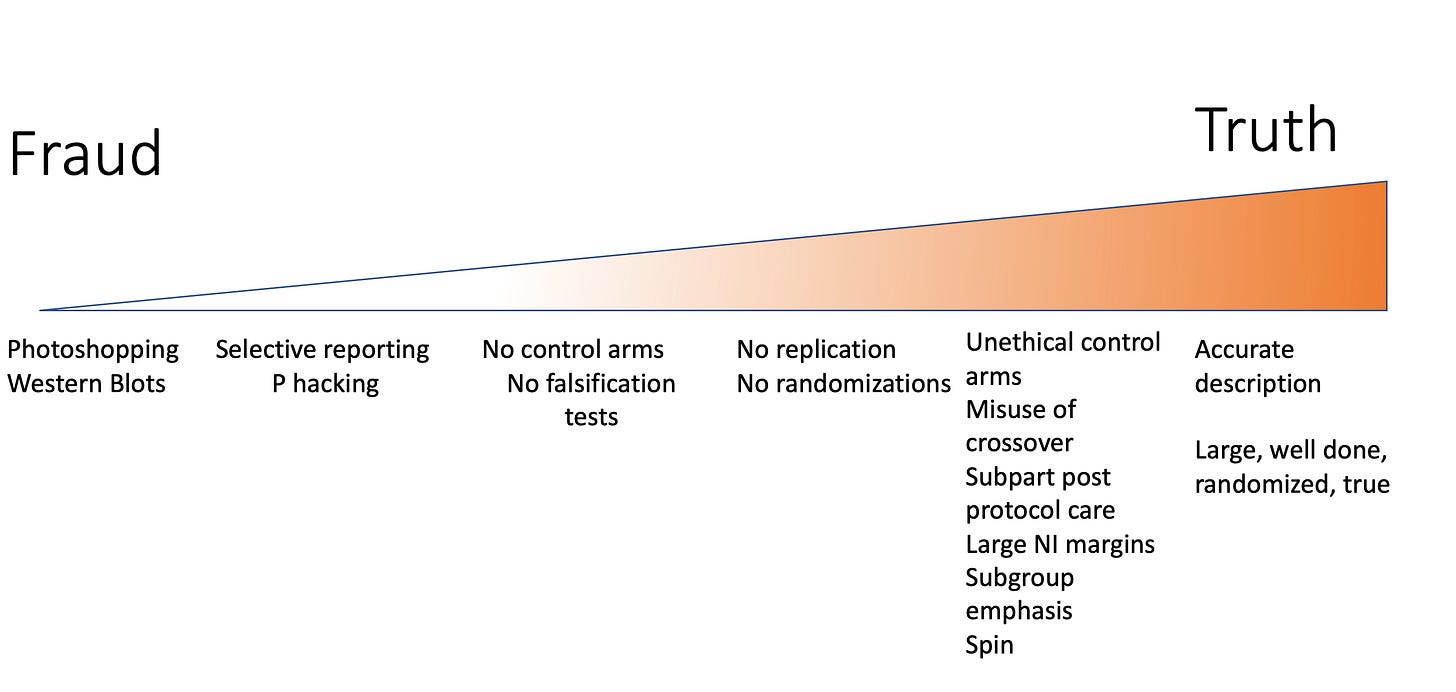

Fraud is a spectrum

Fraud involves some action with the intent to deceive. What that action might be spans a very, very broad spectrum. Some actions deceive directly; others play off the reader’s ignorance. Most people seem to agree that the worst sort of fraud is fabricating data. Then there are many lesser types of fraud, such as cherry-picking what data to present or improperly “cleaning” data.

Finally, there is the use of improper methods when the authors know better: things like p-hacking, not blinding experimenters, “HARKing” (hypothesizing after the results are known), ignoring possible confounders, and not having a proper control group. It’s common for authors to use shoddy statistical techniques or other questionable research practices and then not fully disclose the limitations of those practices, or bury them towards the end of the paper. Researchers also often engage in what Spencer Greenberg has dubbed “importance hacking”: vastly overstating what can be concluded on the basis of their study. If any intent to mislead is present, importance hacking can be a type of fraud.
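To see why p-hacking misleads even when every individual test is computed correctly, here is a minimal simulation sketch of one common form: measuring several outcomes and reporting whichever one comes out significant. It assumes two groups drawn from the same normal distribution (so there is no true effect), 20 subjects per group, independent outcomes, and a two-sample z-test with a known standard deviation of 1. All of these parameters, and the function names, are illustrative choices of mine, not taken from any study discussed here.

```python
import math
import random


def z_test_p(a, b, sigma=1.0):
    """Two-tailed p-value for a difference in means, assuming a known standard deviation."""
    n_a, n_b = len(a), len(b)
    z = (sum(a) / n_a - sum(b) / n_b) / (sigma * math.sqrt(1 / n_a + 1 / n_b))
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_outcomes, n_per_group=20, n_sims=5000, alpha=0.05, seed=0):
    """Fraction of null experiments in which at least one outcome reaches p < alpha.

    Every outcome is pure noise, so any "significant" result is a false positive.
    Reporting only the best of several outcomes is one form of p-hacking.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        p_values = []
        for _ in range(n_outcomes):
            treatment = [rng.gauss(0, 1) for _ in range(n_per_group)]
            control = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(z_test_p(treatment, control))
        if min(p_values) < alpha:
            hits += 1
    return hits / n_sims


if __name__ == "__main__":
    for k in (1, 5, 10):
        print(f"{k:2d} outcome(s): false positive rate ~ {false_positive_rate(k):.3f}")
```

With a single outcome the rate should hover near the nominal 5%. With five independent outcomes it should land near 1 - 0.95^5, or about 23%, so a researcher who quietly tests five things and reports the winner will “find” an effect in roughly one null study out of four.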

Plagiarism may be considered a form of fraud as well: the author intends to deceive readers into thinking someone else’s words are their own. It’s not surprising that many of the worst scientific fraudsters also have a history of plagiarism.

Intent is also fuzzy: it is not always fully conscious, and may be unconscious or semi-conscious:

“A continuous spectrum can be drawn from the major and minor acts of fabrication to self-deception… Fraud, of course, is deliberate and self-deception unwitting, but there is probably a class of behavior in between where the subject’s motives are ambiguous even to himself.” - William Broad and Nicholas Wade, in Betrayers of Truth.

Inappropriate image duplication

Any discussion of fraud prevalence should start with the monumental work of Elisabeth Bik. She visually screened 20,621 papers published in peer-reviewed journals for evidence of inappropriate image duplication and published her findings in a 2016 paper.1 She focused on papers containing the keyword “western blot”, a type of image common in biomedical research that is very easy to doctor. She found that 3.8% of papers contained inappropriate image duplication.

Of course, Bik could not determine whether intent to deceive was present or whether the duplicated images represented honest mistakes. She found that 70.6% of the duplicated images contained some sort of alteration (repositioning, rotation, etc.), while only 29.4% were simple duplications. As she notes, duplication with alteration involves conscious effort and is thus more likely to be the result of intentional misconduct. Moreover, researchers who had published one paper with image duplication were much more likely to have published others. This suggests something intentional is going on. She also found that the rate of inappropriately duplicated images in the literature jumped from 0.5–1% in 1996–2003 to 3–5% in 2004–2014, possibly due to the wider availability of digital editing tools in the later period.

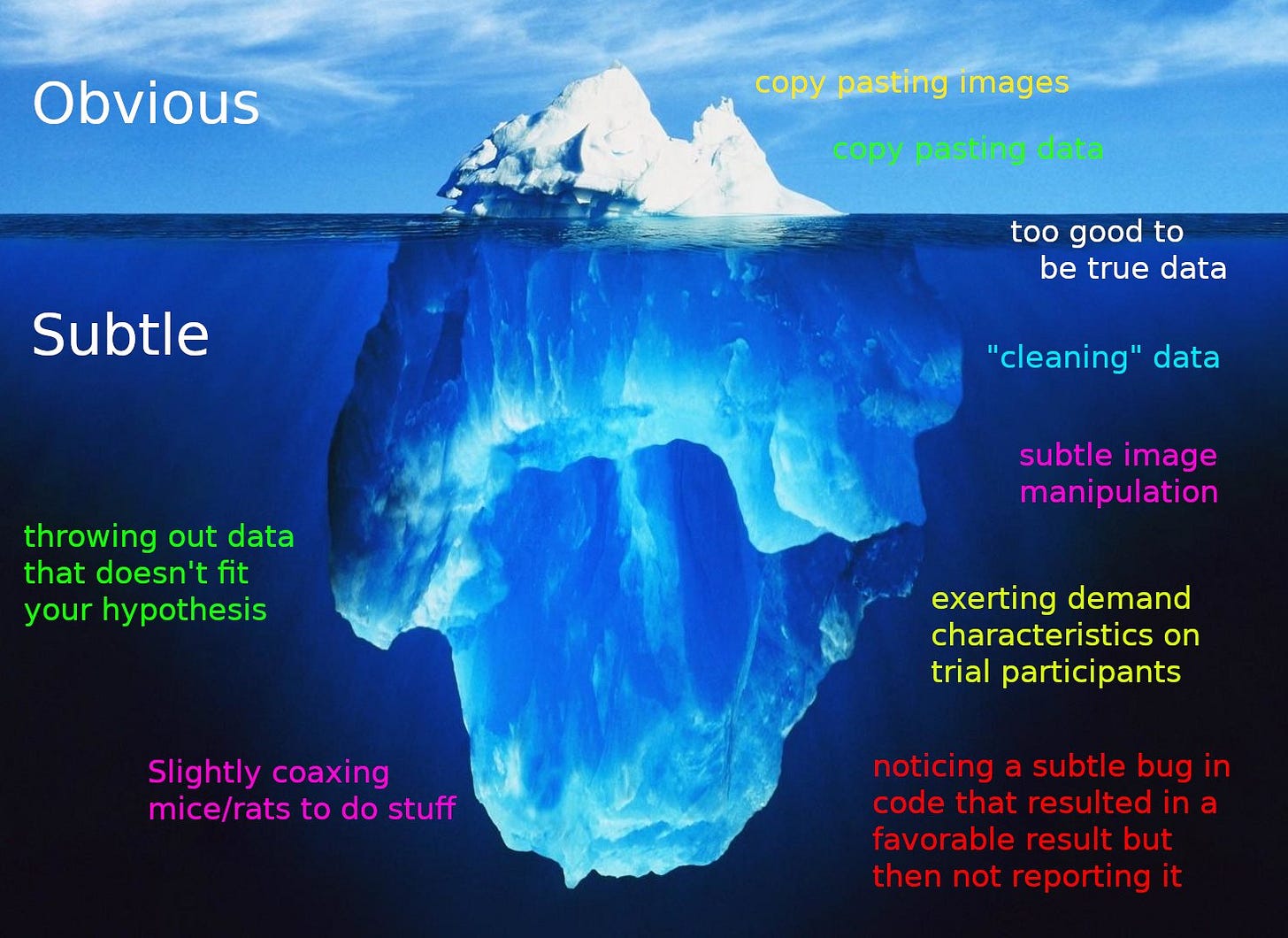

The scary thing about Elisabeth Bik’s work is that she was only looking at a very lazy type of fraud that is relatively easy to detect (i.e., duplicated images or images that had been rotated, etc.). She did not spend the time required to detect photoshopped images. If around 2% of papers contain these simple, likely deliberate manipulations (a conservative fraction of the 3.8% flagged), the overall rate must be considerably higher.

As I put it on Twitter several months ago, image duplication is likely just the tip of the iceberg.

Surveys

A bunch of studies have asked scientists, anonymously, whether they have ever committed fraud. One meta-analysis of these surveys found that about 2% admitted to fabricating or falsifying data.2 While the lizardman constant applies (some respondents never take a survey seriously), the actual number is likely higher, as many people may be loath to admit to fraud even in an anonymous survey, or may be in denial about it. When asked whether they personally knew a colleague who had engaged in this sort of serious fraud, about 15% of respondents said they did, averaged across several surveys. Some surveys suggested that only about 50% of the scientists who observed fraud reported it. The same meta-analysis found that 10% of researchers admitted to engaging in “questionable research practices”.

What about less serious practices further down the fraud spectrum? A survey of 2,155 psychologists found that 22% admitted to rounding a p-value down, 40% had decided to exclude data after looking at its impact on the results, and 60% had engaged in selective reporting of studies that “worked”.3

Rates of fraud seem to vary a lot between fields. Fraud seems to be relatively uncommon in the hard sciences, like physics. This may be due to the more mathematical nature of physics: it’s hard to fake math. Disturbingly, fraud seems to be more common in the biomedical domain, which is exactly where it can do the most damage. For instance, a study of 527 trials submitted to the journal Anaesthesia between 2017 and 2020 found that a whopping 14 percent of them contained false data.4

Is the rate of fraud increasing?

It’s hard to say. Retraction rates have been increasing over time, and many retractions are for fraud. An analysis of 742 papers retracted between 2000 and 2010 found that 28% were retracted because of fraud.5 A different survey of 4,449 retracted papers from 1928 to 2011 found that 20% of retractions were due to outright fraud, 42% to “questionable data/interpretations”, and 47% to plagiarism or duplicate publication (the categories overlap, since a paper can be retracted for more than one reason). Rising retraction rates don’t necessarily mean the rate of fraud is increasing, however; it could simply be that we are getting better at detecting it.

Other circumstantial evidence

In psychology, many statistical results are reported in APA style, which gives the test statistic, degrees of freedom, and p-value together. Researchers developed a program called statcheck that automatically recomputes each p-value from the reported test statistic and degrees of freedom and flags mismatches.6 They ran the program on thousands of papers from eight major psychology journals. They found that a whopping 50% of papers contained at least one erroneous p-value. Disturbingly, errors large enough to change the statistical conclusion were more common among p-values reported as significant, suggesting a bias toward reporting non-significant results as significant. This may be interpreted as indirect evidence of fraud.
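The core idea behind this kind of check can be sketched in a few lines: pull the test statistic out of an APA-style report, recompute the p-value, and see whether rounding could explain the reported value. The real program (released as the R package statcheck) handles t, F, r, χ² and z tests; the Python sketch below is a simplified stand-in that only handles z-tests, whose p-value needs nothing beyond the standard library. The regular expression, the rounding rules, and the names `check_z_report` and `APA_Z` are my own assumptions, not statcheck’s actual implementation. A “decision error” here means the recomputed p-value lands on the other side of .05 from the reported one.

```python
import math
import re

# Matches APA-style z-test reports such as "z = 2.10, p = .036" or "z = 1.50, p < .05".
APA_Z = re.compile(r"z\s*=\s*(-?\d+(?:\.\d+)?),\s*p\s*([<>=])\s*(0?\.\d+)")


def two_tailed_p(z):
    """Two-tailed p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def decimals(number_text):
    """Number of digits after the decimal point in a reported number."""
    return len(number_text.split(".")[1]) if "." in number_text else 0


def check_z_report(text, alpha=0.05):
    """Return (recomputed_p, consistent, decision_error), or None if no z-test is found."""
    match = APA_Z.search(text)
    if match is None:
        return None
    z_text, op, p_text = match.groups()
    z, reported_p = abs(float(z_text)), float(p_text)

    # The reported z was rounded, so the true z lies within half a unit of its last digit.
    z_half = 0.5 * 10 ** -decimals(z_text)
    p_low = two_tailed_p(z + z_half)             # larger |z| gives a smaller p
    p_high = two_tailed_p(max(z - z_half, 0.0))  # smaller |z| gives a larger p
    p = two_tailed_p(z)

    if op == "=":
        # The reported p was rounded too: it is consistent if rounding some p in
        # [p_low, p_high] to the reported precision could produce it.
        p_half = 0.5 * 10 ** -decimals(p_text)
        consistent = reported_p - p_half <= p_high and reported_p + p_half >= p_low
    elif op == "<":
        consistent = p_low < reported_p
    else:  # op == ">"
        consistent = p_high > reported_p

    reported_significant = (op == "=" and reported_p < alpha) or (op == "<" and reported_p <= alpha)
    decision_error = not consistent and reported_significant != (p < alpha)
    return p, consistent, decision_error


if __name__ == "__main__":
    for report in ("z = 2.10, p = .036", "z = 1.50, p = .03", "z = 1.50, p < .05"):
        print(report, "->", check_z_report(report))
```

In this sketch the first report should come back consistent, while the last two should be flagged as both inconsistent and decision errors, because a z of 1.50 corresponds to a two-tailed p of roughly .13. Allowing for rounding on both the statistic and the p-value matters: without it, honest two-decimal reporting would be flagged as an error.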

Why does this matter?

This piece is already getting long, so I’ll keep this section brief:

Fraudulent papers at the very least waste resources as scientists try to reproduce or build off the fraudulent findings. They also demoralize researchers and erode trust in science. Finally, the fraudulent results themselves can be quite harmful, for instance if they influence medical practice. Even if the papers are retracted, preprints and other copies may continue to circulate, and all of the meta-analyses and reviews compromised by the fraudulent work remain in print.

Fraudulent papers can cause a lot of harm. Here are two notable cases:

Vaccines and autism. Andrew Wakefield’s fraudulent paper in The Lancet kicked off the modern anti-vax movement. The paper was retracted for fraud 12 years later, in 2010. Today millions still believe that childhood vaccines such as the MMR vaccine cause autism, leading to lower vaccination rates and measles outbreaks in New York and elsewhere.

Ivermectin. An analysis of 26 major trials of ivermectin for COVID-19 found that over one third had “serious errors or signs of potential fraud”.7 One of the first papers where fraud was discovered was published by Elgazzar et al. on the preprint platform Research Square. Investigators noticed numerous issues: plagiarism, patients who died before the study started, and numbers that looked too good to be true. In another ivermectin study, published in Viruses, the fraud was done in the most lackadaisical manner: data was simply copied 11 times to create a larger sample (and the authors put this obvious fraud on GitHub!). Another case of fraud was discovered by BuzzFeed News after reporters called the hospital where a study was supposed to have taken place and found no records of it ever having been done. Several meta-analyses remain contaminated with fraudulent studies; when the fraudulent studies are removed, no effect is found. As has been well documented elsewhere, these fraudulent works influenced national COVID-19 policy, particularly in Latin America.
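Copying rows wholesale is among the easiest kinds of fabrication to catch. In genuine data with several recorded variables per patient, many exact repeats of the same record are a red flag. Below is a minimal Python sketch of such a check. The record layout, the function name `duplicate_report`, and the toy data are hypothetical, not the actual study’s dataset. Real forensic work would also look for near-duplicates (for example, copied rows with one field nudged), which this exact-match check misses.

```python
from collections import Counter


def duplicate_report(rows):
    """Summarize exact duplicate records in a dataset.

    rows: an iterable of tuples, one per patient record (the field layout is hypothetical).
    Returns (share of rows that repeat an earlier row, most common record, its count).
    """
    counts = Counter(rows)
    if not counts:
        return 0.0, None, 0
    total = sum(counts.values())
    extra_copies = sum(n - 1 for n in counts.values() if n > 1)
    record, top_count = counts.most_common(1)[0]
    return extra_copies / total, record, top_count


if __name__ == "__main__":
    # Toy (age, sex, days_to_recovery, oxygen_saturation) records, invented for illustration.
    genuine = [(34, "F", 7, 96), (51, "M", 12, 91), (47, "F", 9, 94), (29, "M", 5, 98)]
    padded = genuine * 11  # the same block repeated to inflate the sample size
    share, record, count = duplicate_report(padded)
    print(f"{share:.0%} of rows are copies; {record} appears {count} times")
```

Because the check hashes whole records, it still works if the copied rows have been shuffled into a different order.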

Further reading

I recommend Stuart Ritchie’s excellent book Science Fictions, especially Chapter 3, which is all about scientific fraud. He details several shocking cases of scientific fraud and the damage they caused in their respective fields.

The Real Scandal About Ivermectin by James Heathers.

How bad research clouded our understanding of Covid-19 by Kelsey Piper.

References

1. Bik, Elisabeth M., et al. “The Prevalence of Inappropriate Image Duplication in Biomedical Research Publications.” mBio, edited by L. David Sibley, vol. 7, no. 3, July 2016, pp. e00809-16.

2. Fanelli, Daniele. “How Many Scientists Fabricate and Falsify Research? A Systematic Review and Meta-Analysis of Survey Data.” PLOS ONE, vol. 4, no. 5, May 2009, p. e5738.

3. John, Leslie K., et al. “Measuring the Prevalence of Questionable Research Practices With Incentives for Truth Telling.” Psychological Science, vol. 23, no. 5, May 2012, pp. 524–32.

4. Carlisle, J. B. “False Individual Patient Data and Zombie Randomised Controlled Trials Submitted to Anaesthesia.” Anaesthesia, vol. 76, no. 4, Apr. 2021, pp. 472–79.

5. Steen, R. G. “Retractions in the Scientific Literature: Is the Incidence of Research Fraud Increasing?” Journal of Medical Ethics, vol. 37, no. 4, Apr. 2011, pp. 249–53.

6. Nuijten, Michèle B., et al. “The Prevalence of Statistical Reporting Errors in Psychology (1985–2013).” Behavior Research Methods, vol. 48, no. 4, Dec. 2016, pp. 1205–26.

7. Hill, Andrew, et al. “Ivermectin for COVID-19: Addressing Potential Bias and Medical Fraud.” Open Forum Infectious Diseases, vol. 9, no. 2, Feb. 2022, p. ofab645.
