Quantum computing: hype vs reality

Part 1 of a two-part series.

On December 9th, 2024, Google announced a “breakthrough” in quantum computing in a sleek, stylish presentation:

Notice this was not just from Google Quantum, it was from Google Quantum AI. Google was not satisfied with limiting themselves to just one buzzword.

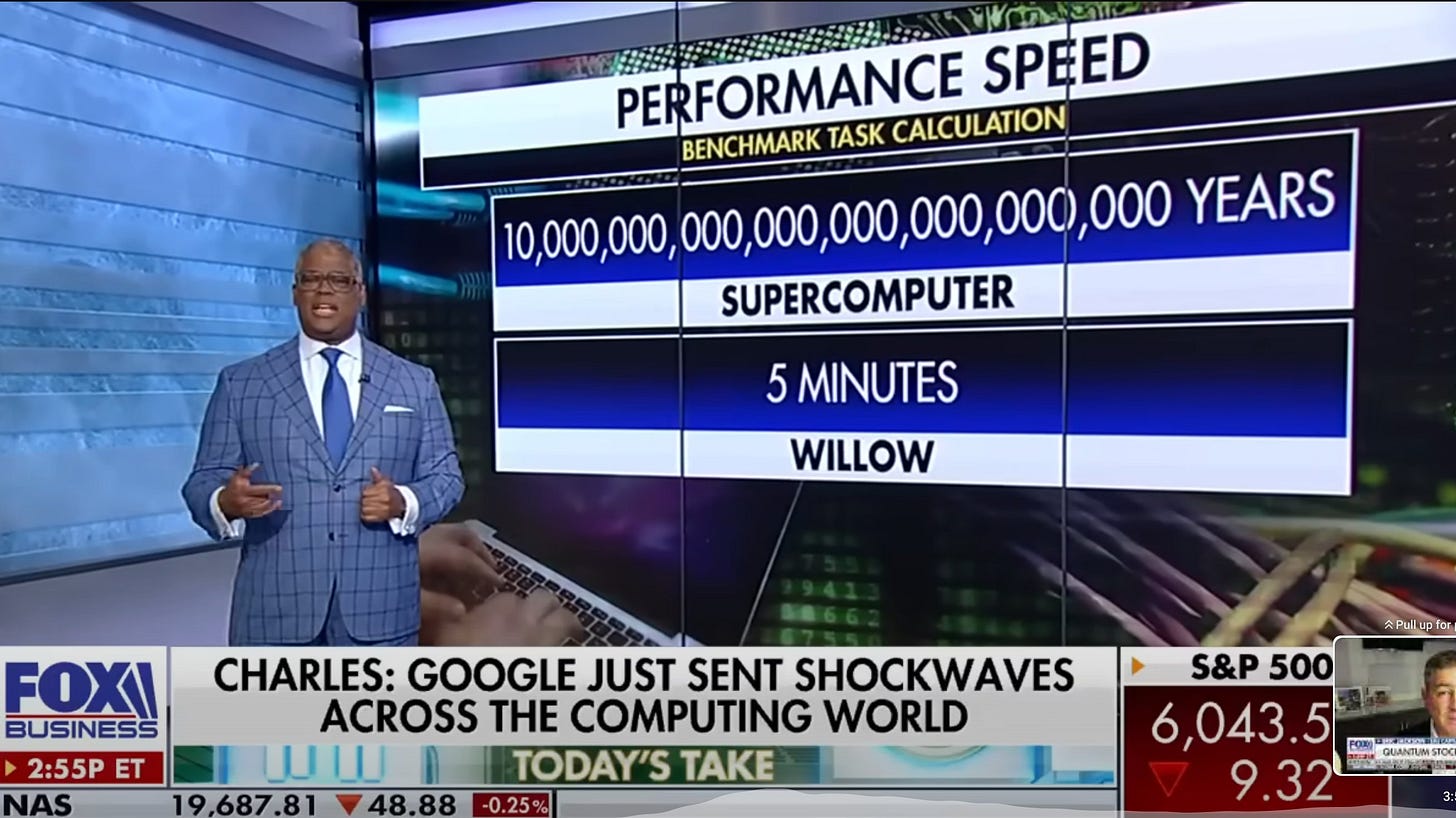

This was a marketing coup. The Google team announced that their chip, named Willow, solved a problem in just five minutes that would take a supercomputer 10,000,000,000,000,000,000,000,000 years. This accomplishment made headlines around the world:

But what was this problem that they solved? It’s a problem that Google invented several years ago called “quantum circuit sampling.” This is not the first time they’ve used this problem to compare their quantum machines to classical computers. Here’s how quantum physicist John Preskill describes it:

“The problem their machine solved with astounding speed was carefully chosen just for the purpose of demonstrating the quantum computer’s superiority. It is not otherwise a problem of much practical interest.”

Quantum circuit sampling involves creating a random quantum circuit and then sampling from the output. This is very difficult for classical computers to do, because the number of possible states you have to keep track of to simulate the evolution of a quantum circuit grows exponentially with the number of qubits. It is a problem with zero practical utility. Google’s Willow chip has 105 qubits, which is far beyond what classical computers can simulate. So this accomplishment is not surprising and is not a sign of any major progress.
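To make the exponential growth concrete, here is a rough sketch of how much memory a brute-force classical simulation would need. It assumes the simulator stores one complex amplitude (16 bytes) for every possible state, which is only the most naive approach; the function name and the byte count are my own choices for illustration.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold every amplitude of an n-qubit state vector.

    Assumption: one complex number per basis state, stored as two
    64-bit floats (16 bytes). Smarter classical methods avoid storing
    the full vector, so treat this as the naive cost only.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (30, 50, 105):
    print(f"{n} qubits: {statevector_bytes(n):.3e} bytes")
```

Thirty qubits already need 16 GiB under these assumptions, and every additional qubit doubles the requirement.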

There was a second aspect to Google’s announcement that had to do with quantum error correction. This advancement is much harder to understand, and received much less attention, although it is more substantial. Quantum circuits are highly prone to noise, and require lots of qubits to be dedicated to error correction. The qubits available for computation are called “algorithmic qubits,” while the rest are error-correcting qubits. These error-correcting qubits can also have errors themselves. Google showed that adding more error-correcting qubits made the encoded qubit more reliable rather than less, so the number of error-correcting qubits required does not increase uncontrollably as the system is scaled up in size. This was described as a critical milestone towards building a practical, large-scale quantum computer. That said, the extent to which this development represents a true breakthrough is unclear. Certainly, it appears an important threshold has been passed. To me, what they accomplished is a bit like getting a car’s engine running. Up until now, the car was being pushed by hand. Now they have started the car, but the car is still moving very slowly, and it still has to travel many miles.

As a result of the announcement, quantum computing stocks skyrocketed:

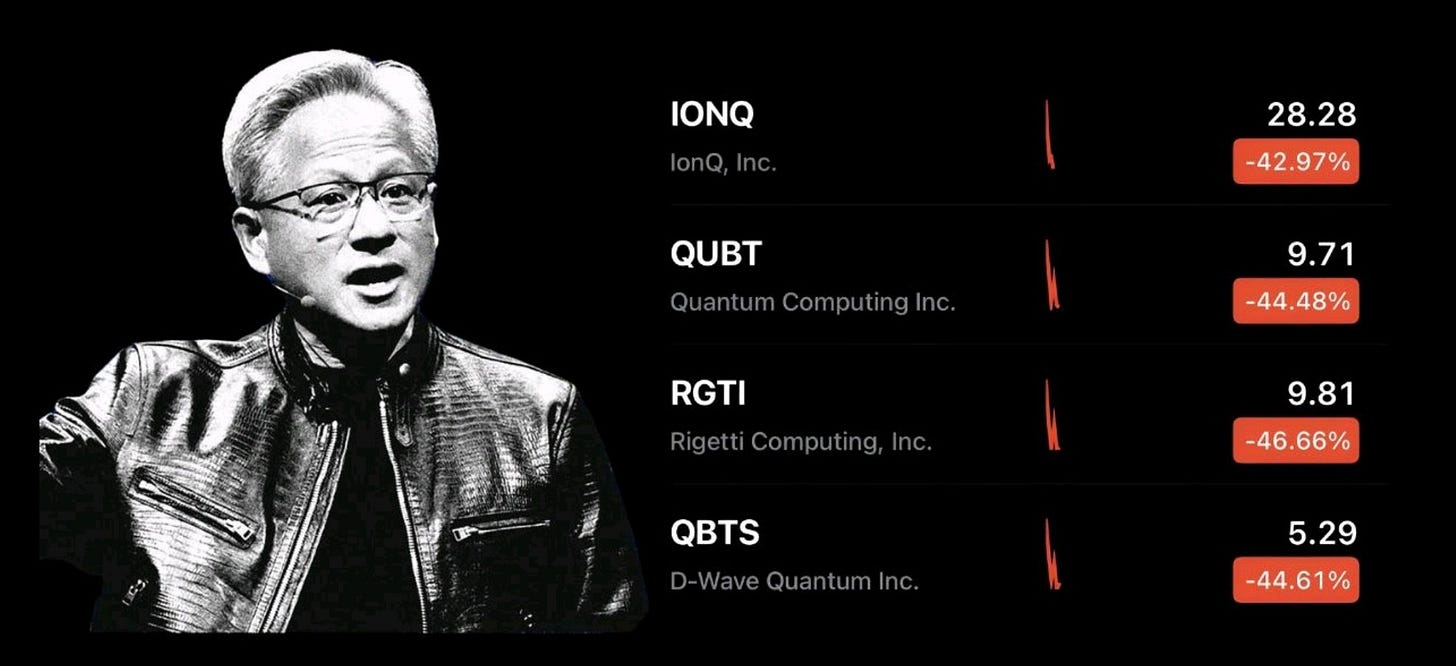

That figure is from December 25th. The stock bubble continued an explosive run-up until January 7th, when NVIDIA CEO Jensen Huang said that quantum computers were “15 to 30 years away.” The quantum bubble popped and the stocks crashed almost 50% in less than a day:

It seems unlikely these stocks are going to recover anytime soon, although another run-up in price is certainly possible in the future.

What are quantum computers?

I’m going to assume readers are already familiar with the basics of quantum computing. However, I’ll review a few basic points. Classical computers are built around manipulating bits, which can only take on two states, “0” or “1”. Quantum computers are based around qubits. A qubit is a two-state quantum system, and thus can be in any superposition of two states. Thus, it can be 0, 1, or “any combination” of the two. If we consider a group of N qubits, the number of basis states the group can be in a superposition of grows as 2^N, that is, exponentially.

With a quantum computer, many computations can run in parallel on these qubits, all in superposition. Some people on social media have been saying that these different computations run in parallel universes, but that is based on a misunderstanding of the many-worlds interpretation of quantum mechanics. If the many-worlds interpretation is true (about 50% of quantum foundations experts subscribe to it), then the process of branching into different universes (“worlds”) occurs when a quantum system interacts with its outside environment through a process called decoherence. For instance, decoherence can occur during interaction with a macroscopic measurement device. Anyway, the point is that when the circuits in the quantum computer are measured (‘looked at’), the superposition is lost. If you are very clever, then you may be able to figure out a way to collapse that superposition so that the desired answer to your computation pops out at the end.
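Here is a minimal sketch of superposition and measurement, written in plain Python rather than with any quantum library. The function names are hypothetical, and the state is simply a list of amplitudes, one per basis state. Measuring picks a single outcome with probability equal to the squared magnitude of its amplitude, and everything else in the list is lost.

```python
import math
import random


def uniform_superposition(num_qubits):
    """Amplitudes of the equal superposition over all 2**n basis states."""
    dim = 2 ** num_qubits
    return [1 / math.sqrt(dim)] * dim


def measure(amplitudes, rng):
    """Sample one basis state with probability |amplitude|**2."""
    probs = [abs(a) ** 2 for a in amplitudes]
    outcome = rng.choices(range(len(amplitudes)), weights=probs, k=1)[0]
    num_qubits = (len(amplitudes) - 1).bit_length()
    return format(outcome, f"0{num_qubits}b")  # e.g. "101" for 3 qubits


state = uniform_superposition(3)
print(len(state))                        # 8 amplitudes for 3 qubits
print(measure(state, random.Random(0)))  # a single 3-bit string
```

With a uniform superposition the measurement is just a random guess. The cleverness of a quantum algorithm lies in reshaping the amplitudes before measuring so that the desired answer is the likely outcome.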

This is where most elementary explanations stop. I’d like to go a bit further, and explain that a qubit can also be in something called a mixture of quantum states. Long story short, the result is that the state of a qubit can be visualized as living within a sphere, known as the Bloch sphere.

The states on the surface are called “pure states” while the states within the sphere are called “mixed states.” Understanding this isn’t actually important for anything that follows, but this provides an example of how these things are actually more complicated than how they are typically described in popular science articles.
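For the curious, here is a small sketch of how a qubit state maps to a point in that sphere (usually called the Bloch sphere). It assumes the state is given as a 2x2 density matrix, written here as nested Python lists; the helper names are mine. A radius of 1 means the state is on the surface (pure), and anything smaller means it is inside (mixed).

```python
import math


def bloch_vector(rho):
    """Return (x, y, z) for a 2x2 density matrix given as nested lists."""
    off_diagonal = complex(rho[1][0])
    x = 2 * off_diagonal.real
    y = 2 * off_diagonal.imag
    z = (complex(rho[0][0]) - complex(rho[1][1])).real
    return (x, y, z)


def radius(vec):
    """Distance of the point from the center of the sphere."""
    return math.sqrt(sum(c * c for c in vec))


plus_state = [[0.5, 0.5], [0.5, 0.5]]    # equal superposition of 0 and 1 (pure)
coin_flip = [[0.5, 0.0], [0.0, 0.5]]     # 50/50 classical mixture of 0 and 1
biased_mix = [[0.75, 0.0], [0.0, 0.25]]  # 75/25 classical mixture

for name, rho in [("plus", plus_state), ("coin", coin_flip), ("biased", biased_mix)]:
    print(name, bloch_vector(rho), radius(bloch_vector(rho)))
```

The superposition and the coin-flip mixture give the same 50/50 statistics when you measure 0 versus 1, yet they sit at different points: one on the surface, the other at the center.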

Quantum computers have very few applications

Given the progress that has been made in the last few decades, it seems likely we will get to useful quantum computers some day, barring the world ending or some other catastrophe. However, that doesn’t mean that everyone will have a quantum computer on their desk, or that AI will be run on quantum computers. The idea that quantum computers will replace classical computers is probably the most common misunderstanding about this subject right now. There is a big narrative among amateur investors that quantum computing is an existential threat to companies like NVIDIA. That’s just not the case.

Putting aside optimization algorithms and quantum simulation for a second, there are only three really useful quantum algorithms known:

Shor’s algorithm for factoring (used to break encryption), invented by Peter Shor at Bell Labs in 1994 (he has since moved to MIT).

Grover’s algorithm for search, invented by Lov Grover at Bell Labs in 1996.

The quantum Fourier transform, invented by Don Coppersmith at IBM in 1994.

No major algorithms of any note with a proven quantum advantage have been discovered since 1996. This is despite hundreds of physicists and mathematicians trying to discover new algorithms. Algorithmic progress in quantum computing is super hard. Many experts in the field believe the number of computational problems where you can get a quantum advantage is very limited.
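To give a feel for why factoring is the flagship example, here is a sketch of the classical scaffolding around Shor’s algorithm. Factoring a number n reduces to finding the “order” of some number a modulo n, and that order-finding step is the part a quantum computer speeds up. In this sketch the order is found by brute force, which only works because n is tiny; the function names are my own.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This loop stands in for the quantum step. It assumes gcd(a, n) == 1,
    otherwise no such r exists and the loop would never finish.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Try to split n using the order of a modulo n; None means 'pick another a'."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared   # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                  # odd order: this choice of a is no use
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # trivial square root: also no use
    p = gcd(half - 1, n)
    return p, n // p


print(find_order(7, 15))         # 4
print(factor_from_order(7, 15))  # (3, 5)
```

For the numbers used in real encryption, the brute-force loop would take longer than the age of the universe, which is why the quantum speedup on that one step matters.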

When it comes to optimization, the benefits of a quantum computer are hotly contested. Anyone who says that quantum computers are proven better than classical ones when it comes to optimization is either misinformed, confused, or lying. The field has been yo-yoing around on this question for over a decade. There is a lot of confusion about the benefit of quantum optimization algorithms, even among experts, and my read of the situation is that the issue is far from settled. Scott Aaronson has been writing about this for a long time on his blog, Shtetl-Optimized. His voice has generally been drowned out by many spouting boosterism and misleading information on the subject.

When Richard Feynman first proposed quantum computers in 1981, he thought their main application would be to simulate quantum systems. The biggest application here will be to help physicists with certain niche simulation problems in physics and materials science that classical computers can’t solve because the systems being simulated involve an enormous number of quantum interactions. For instance, high-temperature superconductors are hard to simulate with classical hardware. So, quantum computers could help us find better superconductors. There are also problems in high-energy particle physics where a quantum computer could be helpful. Commercially, quantum simulation might be useful for materials discovery, maybe.

It is also possible that quantum simulation could be useful for drug discovery. It may be possible to simulate a small drug molecule in isolation with a million qubits. However, to simulate a drug-protein interaction a vastly larger number will be needed (somewhere between 100 million and 5 billion qubits total, according to an analysis by GPT-4o). Another point is that for drug discovery, perfect quantum simulations are usually not needed or that useful. In fact, right now most drug companies don’t even do classical molecular dynamics simulation, and the few that do use a lot of approximations in their simulations. For quantum simulation (whether for drugs or materials), approximate methods like density functional theory or quantum Monte Carlo are usually good enough. Classical algorithms for approximate quantum simulation have improved dramatically over the past few decades. Recently, machine learning has been used to accelerate those algorithms, dramatically lowering computational requirements. Thus, it is highly questionable whether future quantum computers will be able to achieve a definitive advantage over classical computers for commercial applications requiring simulation.

Long story short, the only major proven use-case for quantum computers is decryption. The two other algorithms listed above have a lot of caveats; the devil is in the details. For instance, with search and the quantum Fourier transform, there is substantial overhead involved in loading the data into the quantum computer and reading the result out. This overhead, which is rarely discussed outside of expert circles, seriously limits the utility of both algorithms. The development of quantum RAM could help, but that requires its own set of major breakthroughs.
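To illustrate both the speedup and the caveat for search, here is a toy classical simulation of Grover’s algorithm on a list of amplitudes. It is a sketch with hypothetical function names, not how a real device is programmed. Notice that the very first line of the simulation builds a list with one entry per item being searched: the sketch takes for granted that the data is already loaded, which is exactly the overhead described above.

```python
import math


def grover_success_probability(num_items, marked, iterations):
    """Probability of measuring the marked item after some Grover iterations."""
    amp = [1 / math.sqrt(num_items)] * num_items  # start in a uniform superposition
    for _ in range(iterations):
        amp[marked] = -amp[marked]                # oracle: flip the marked item's sign
        mean = sum(amp) / num_items
        amp = [2 * mean - a for a in amp]         # diffusion: reflect about the mean
    return amp[marked] ** 2


def optimal_iterations(num_items):
    """Roughly (pi / 4) * sqrt(N) iterations are needed."""
    return math.floor(math.pi / 4 * math.sqrt(num_items))


n = 1024
k = optimal_iterations(n)
print(k)  # 25 iterations, versus about 512 lookups on average classically
print(grover_success_probability(n, marked=3, iterations=k))
```

The speedup is quadratic, not exponential: searching a million items takes about 785 iterations instead of about 500,000 lookups, which is helpful but easily eaten up by loading costs.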

Investing in quantum computing is very risky

In the next post in this two-part series, I review three publicly traded quantum computing companies, each of which is pursuing a different approach. There are three dominant approaches being explored by commercial players: ion traps, superconducting, and optical/photonic. There are also a few less common approaches, like neutral atom quantum computing. There are pros and cons to each approach and no clear winner, which is why they all exist and are all getting funding. At some point, one of these approaches will likely win out. Right now, nobody knows which one will win, so that is one reason investing in quantum computing companies is risky. Of course, one way of reducing this risk in your portfolio is to invest in companies pursuing a variety of approaches and hope that the losses you incur on the losers are compensated by the winner(s).

Right now, the only major application of quantum computers is decryption. Of course, more applications may be developed, but algorithmic progress has been extremely slow. Quantum-resistant encryption algorithms exist today and will be used more and more going forward. By the time quantum computers are able to decrypt stuff, the world may have moved to quantum-resistant encryption years or decades earlier.

According to analyst Martin Shkreli, the NSA’s entire budget is around $11 billion, and the entire decryption business at NSA is probably worth about $1 billion annually. When you run the numbers, that means these stocks are still overvalued, even if a single company could capture a sizable part of NSA’s budget.

All approaches to quantum computing have scaling challenges. There is no clear timeline as to when the >1 million qubits needed for a useful machine will be reached. Of course, these companies all have scaling timelines, but those timelines are pure speculation, based on pure trend-line extrapolation at best.

Let me do the most optimistic trend-line extrapolation I could find. For a quantum computer for decryption, you’d need about 1 million qubits (about 4,000 AQs, with the rest for error correction), and they also need to be significantly entangled. This means you’re going to need a quantum volume between 2^30 and 2^40, according to GPT-4o. According to this source, quantum volumes have been increasing exponentially over time, doubling roughly every 4 months. If this were to continue, the target of 2^30 to 2^40 would be reached in 5 to 8 years. That is pretty good! However, I am extremely skeptical about this extrapolation, since the vast majority of tech trends plateau. Drawing an analogy to Moore’s law here is not a good argument. Also, Moore’s law is largely predicated on exponentially increasing investment over time. This requires that the return on investment stays fixed during scaling. There is no good argument that this will be the case here, since the number of applications and possible customers is strictly limited, unlike general-purpose computation, for which there is near-infinite market demand.
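The arithmetic behind this kind of extrapolation is simple enough to sketch. The starting quantum volume below (2^16) is a hypothetical baseline I picked for illustration, not a figure from the source; it happens to land close to the 5 to 8 year range, and a different starting point shifts the answer accordingly.

```python
def years_to_target(current_log2_qv, target_log2_qv, doubling_months=4.0):
    """Years until the target quantum volume is reached, if doubling continues.

    Quantum volumes are given as exponents of 2, so the number of
    doublings needed is just the difference between the exponents.
    """
    doublings = max(target_log2_qv - current_log2_qv, 0)
    return doublings * doubling_months / 12


baseline_log2_qv = 16  # hypothetical starting point, an assumption for this sketch

for target in (30, 40):
    years = years_to_target(baseline_log2_qv, target)
    print(f"2^{target}: about {years:.1f} years")

# Error-correction overhead implied by the figures above:
print(1_000_000 / 4_000)  # 250.0 physical qubits per algorithmic qubit
```

The whole estimate hinges on the doubling time staying at 4 months. Stretch it to 8 months and every number doubles.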

Recall that the demand for quantum decryption is expected to decrease over time. Right now governments are supporting the development of quantum computers, but at some point they may decide it’s not worth continued investment, and the field could enter a “quantum winter.” That would almost certainly kill all of these startups.

When it comes to startups in particular, they are competing against big players like Google and IBM. Right now, the big players are ahead. IBM is beating Rigetti on two-qubit gate fidelity and number of qubits. IonQ is competing with Quantinuum, a spinoff from Honeywell, which leads in quantum volume (one of the most important metrics) by a large margin. Most startups go bust. In the end there are usually a few big winners. I see no reason why that won’t also be the case here.

Thank you to ChatGPT, Claude, and Quillbot for helping me research and write this, and to Greg Fitzgerald and Ben Ballweg for providing feedback on an earlier draft of this post. I also want to acknowledge Martin Shkreli’s YouTube streams, from which I got a number of insights.

Part 2

Read Part 2 here (published 1/18/25)

In the second part of this two-part series, I analyze three publicly traded quantum computing startups: IonQ, Rigetti, and Quantum Computing, Inc. These three companies are taking different approaches, and I explore the physics of each approach.

External links to learn more

Videos:

“Quantum Hype Goes Crazy. But Why?” - physicist Sabine Hossenfelder (Jan 2025)

“Revealing the Truth About Quantum Computing with Scott Aaronson” (Oct 2023)

Articles:

“Above my pay grade: Jensen Huang and the quantum computing stock market crash” - by quantum computing algorithms Professor Scott Aaronson. (2025)

"Quantum computing has a hype problem" - by Sankar Das Sarma, chaired faculty at UMD's prestigious Joint Quantum Institute. (2022)

“Why I Coined the Term ‘Quantum Supremacy’” - by well-known quantum computing expert Prof. John Preskill. (2019)

Scientific articles:

Abbas, Amira, et al. “Challenges and Opportunities in Quantum Optimization.” Nature Reviews Physics, 6 (12), Dec. 2024, pp. 718–35.

Sabine Hossenfelder YT video is incorrectly linked

On the part about computations not running in parallel universes, this is precisely what was suggested in the MIT Press:

"How could such a computer carry out calculations?…Deutsch’s answer is that the calculation is carried out simultaneously on identical computers in each of the parallel universes corresponding to the superpositions. For a three-qbit computer, that means eight superpositions of computer scientists working on the same problem using identical computers to get an answer. It is no surprise that they should “collaborate” in this way, since the experimenters are identical, with identical reasons for tackling the same problem…It is a matter of choice whether you think that is too great a load of metaphysical baggage. But if you do, you will need some other way to explain why quantum computers work."

Of course, the MIT Press didn’t assert this claim with absolute certainty, but it did leave the door open.

I don’t know enough to comment but I think there is a good discussion to be had here. What are we missing?