Why is it so hard to build a quantum computer?

A first-principles look at the extreme engineering challenges

(Part 1: Quantum computing: hype vs. reality)

There are dozens of quantum computing startups,[1] but only three that are publicly traded: IonQ, Rigetti Computing, and Quantum Computing Inc. In this post, I analyze the engineering challenges each company faces. These challenges stem from well-understood physics. Four approaches to building a quantum computer are currently dominant,[2] and it seems likely one will ultimately win out. Using physics and engineering knowledge, we may be able to gain some insight into the commercial viability of each of these approaches.

However, a full analysis of these companies for investment purposes would require looking at their financials, marketing/brand success, current contracts, and the quality of their human capital. I stick to the physics here because it is what I find most interesting and am most knowledgeable about. Nothing in this post is intended as investment advice.

Background info (skip if familiar with qubits, decoherence, gates, etc.)

Quantum computers leverage the principles of quantum mechanics to perform computations using qubits, the quantum equivalent of classical bits. Unlike classical bits, which can be either 0 or 1, qubits can exist in a quantum superposition of states.

However, qubits are highly sensitive to their environment, making them prone to decoherence. Decoherence occurs when qubits interact with their environment, altering the qubit's quantum state in a stochastic manner.
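
A toy numerical picture of superposition and decoherence may help here. The sketch below, in Python with NumPy, stores a qubit in the equal superposition (|0> + |1>)/sqrt(2) as a density matrix and applies a pure-dephasing model in which the off-diagonal "coherence" terms decay as exp(-t/T2). The single time constant `t2` and the function name `dephase` are illustrative assumptions; real qubits also lose energy (so-called T1 relaxation), which this sketch ignores.

```python
import numpy as np

# Equal superposition (|0> + |1>)/sqrt(2), stored as a 2x2 density matrix.
plus = np.array([1.0, 1.0]) / np.sqrt(2)
rho0 = np.outer(plus, plus.conj())

def dephase(rho, t, t2):
    """Toy pure-dephasing model: populations (diagonal) are untouched,
    coherences (off-diagonal) decay as exp(-t / t2)."""
    out = rho.astype(complex)  # astype returns a copy, so the input is not modified
    decay = np.exp(-t / t2)
    out[0, 1] *= decay
    out[1, 0] *= decay
    return out

for t in (0.0, 1.0, 5.0):  # time in units of T2
    rho_t = dephase(rho0, t, t2=1.0)
    print(f"t = {t} T2: P(0) = {rho_t[0, 0].real:.2f}, coherence = {abs(rho_t[0, 1]):.3f}")
```

After a few T2, the qubit still gives 0 or 1 with 50/50 odds, but the off-diagonal terms are essentially gone: it now behaves like a classical coin flip rather than a superposition.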

Qubits can’t do much on their own. They need to be entangled. Entanglement is a phenomenon where qubits become interconnected, such that the state of one qubit is correlated with the state of another, even over long distances. Entanglement is created by applying gates to the qubits, operations that play a role similar to the AND, OR, and NOR gates found in classical computers. Some gates have no classical counterpart, for instance the Hadamard gate, which puts a single qubit into an equal superposition; the controlled-NOT (CNOT) gate is the standard two-qubit gate for entangling qubits. A key metric is gate fidelity, which should be above 99.9%. Since qubits are a scarce resource, they often need to be re-used during the course of a computation, so one qubit might take part in one gate and later be used in a completely different gate. This requires manipulating the qubits with external inputs.
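
To make gates and entanglement concrete, here is a minimal state-vector sketch in Python/NumPy (not tied to any company's hardware or software kit). A Hadamard gate puts the first qubit into superposition and a CNOT then entangles it with the second, producing the Bell state (|00> + |11>)/sqrt(2): each qubit alone looks random, but the two measurement results always agree. The qubit-ordering convention (first qubit = leftmost bit) is an assumption of this sketch.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)   # Hadamard gate
I2 = np.eye(2)
CNOT = np.array([[1, 0, 0, 0],                  # control = first qubit, target = second
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

state = np.array([1.0, 0.0, 0.0, 0.0])          # both qubits start in |00>
state = np.kron(H, I2) @ state                  # first qubit -> (|0> + |1>)/sqrt(2)
state = CNOT @ state                            # entangle: (|00> + |11>)/sqrt(2)

probs = np.abs(state) ** 2
for index, p in enumerate(probs):
    print(f"|{index:02b}>: {p:.2f}")
```

Only |00> and |11> ever appear, each half the time; outcomes like |01> never do, which is the signature of entanglement.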

So, the core challenge of building a quantum computer revolves around isolating the qubits from the environment while still being able to manipulate those qubits to 1. load initial data into some qubits, 2. apply initial gates, 3. re-use qubits during the computation by applying different gates as needed, and 4. read the answer out.

IonQ - trapped ion qubits

I think IonQ may be the most promising among the three publicly traded quantum computing startups. The company was formed out of Chris Monroe’s group at the University of Maryland’s Joint Quantum Institute in 2015. They recently announced support from Maryland’s state government to build a “quantum intelligence campus” near the University of Maryland (UMD). They hope to raise one billion dollars to make this campus happen. It’s not entirely clear where that money will come from.

IonQ's quantum computer consists of a linear chain of positively charged ions, held in place by oscillating electric fields (a Paul trap). Historically, these were ytterbium ions (atomic number 70), but around 2023 they transitioned to lighter barium ions (atomic number 56).

Let’s look at how many ions they have managed to trap as a function of time:

2016: 5

2017: 5-10

2018: 10-11 (?)

2019: 13-20

2020: 32

2021: 32

2022: 32

2023: 32-35 (IonQ Aria)

2024: 40 (IonQ Forte)
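
Taken at face value, the list implies a doubling time for the ion count. A quick sketch in Python, assuming steady exponential growth between the two chosen years (where the list gives a range, the upper value is used; this is an assumption, though it does not affect the years compared here):

```python
import math

# Ion counts from the list above; for ranges, the upper value is used (an assumption).
ION_COUNTS = {2016: 5, 2017: 10, 2018: 11, 2019: 20, 2020: 32,
              2021: 32, 2022: 32, 2023: 35, 2024: 40}

def doubling_time(counts, start, end):
    """Years per doubling between two years, assuming steady exponential growth."""
    doublings = math.log2(counts[end] / counts[start])
    return (end - start) / doublings

overall = doubling_time(ION_COUNTS, 2016, 2024)   # 5 -> 40 ions: 3 doublings in 8 years
recent = doubling_time(ION_COUNTS, 2020, 2024)    # 32 -> 40 ions
print(f"2016-2024: {overall:.1f} years per doubling")
print(f"2020-2024: {recent:.1f} years per doubling")
```

Over the whole period the count doubled roughly every 2.7 years, but from 2020 to 2024 the pace corresponds to roughly one doubling every 12 years.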

Their next target is 64-65 ions, in their Tempo system. However, it’s doubtful the number of ions can keep increasing like this for much longer; we’ll get to why a bit later. First, I want to explain that the number of ions is not the same as the number of usable qubits in each system. Some qubits are needed for error correction, which yields a smaller number of algorithmic qubits (AQs). Additionally, in IonQ’s Forte system, one of the ions is used for optical alignment purposes rather than computation. One has to read IonQ’s marketing materials closely. IonQ Forte, which has 40 ions, was said to have a “capacity of up to 35 qubits.” This doesn’t necessarily mean that they have actually realized 35 AQs yet; it’s just a theoretical maximum which may or may not have been realized. The actual number of AQs depends on system noise. IonQ’s 32-35 ion Aria system achieved 20 AQs in 2022. Currently they can get 25 AQs with their Aria system, and this is what they make available in their cloud service. Some of IonQ’s current marketing materials say that Forte, with 40 ions, yields 36 AQs, one higher than the capacity they stated in January 2024. According to Quantinuum, a privately held company that also builds trapped ion quantum computers, the number of AQs IonQ had achieved with Forte in March 2024 was only around nine, despite IonQ advertising it as having 32 at the time. Another, more recent article I saw suggested that Forte only has twelve AQs. If the number of AQs achieved so far with Forte’s longer ion chain is less than the number achieved with Aria’s shorter chain, that is a red flag in my book (if you know more about this, comment below).

As you may have guessed by this point, creating these traps is not easy. To be more precise, trapping the ions is pretty easy, but getting them to entangle and do quantum computation is not. Lasers need to be used to cool each ion to millikelvin temperatures. Then, precisely manipulated EM fields need to be used to move the ions close to each other and entangle them. Laser pulses have to be aimed at each ion to manipulate their energy levels. The magnetic field in the trap has to be kept extremely uniform, as any slight deviation can ruin the quantum state. Vibrational states of the ion chain are utilized in quantum computation, so naturally these systems are very sensitive to vibration. I have heard that a truck rumbling by on the street can disturb these systems. Setting up and calibrating a single ion trap chip can take six months. Still, it has been done, and since only the ions need to be kept cold, no large-scale cooling apparatus is needed (the rest of the chip can operate at room temperature). As we mentioned, noise is a problem here. Some ion trap devices that have been built are referred to as "noisy intermediate-scale quantum" (NISQ) devices. The number of algorithms that can be run on NISQ devices is greatly reduced. Still, the noise issues here arguably are not as great as other approaches. Ion trap quantum computers can exhibit extremely long coherence times, reaching as high as seconds or minutes.

The downside of ion traps, however, is slow gate operation. Roughly speaking, the "clockrate" of these quantum computers is greatly reduced, because some gate operations require that ions be physically moved around. On a hypothetical 20-million-qubit superconducting quantum computer, it was estimated in 2019 that factoring a 2048-bit integer to break RSA encryption would take about eight hours. On an ion trap quantum computer, by contrast, this would be 1,000 times slower — it would take 8,000 hours, or 333 days. Currently, superconducting approaches remain competitive with ion traps, so this may concern investors.
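
The arithmetic behind the 333-day figure is simple, and writing it out makes the key assumption visible: the whole difference is carried by a single, rough slowdown factor of 1,000. Both inputs below are the numbers quoted in the text.

```python
# Re-deriving the ion-trap estimate quoted above (both inputs are the text's numbers).
SUPERCONDUCTING_HOURS = 8     # 2019 estimate for RSA-2048 on ~20 million superconducting qubits
ION_TRAP_SLOWDOWN = 1_000     # rough factor by which ion-trap operation is slower

ion_trap_hours = SUPERCONDUCTING_HOURS * ION_TRAP_SLOWDOWN
ion_trap_days = ion_trap_hours / 24
print(f"{ion_trap_hours:,} hours is about {ion_trap_days:.0f} days")
```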

All quantum computing approaches have serious issues when it comes to scaling to the millions of qubits that are needed for any practical application. For decades, physicists have debated the scaling prospects of ion traps vs. superconducting qubits vs. more exotic approaches. When I was in the field in 2012, physicists were much more pessimistic about the scaling prospects of ion traps. I’m not really aware of the current state of the discourse, but the scaling challenges of ion traps appear to remain very substantial.

As the chain of ions gets longer, it becomes harder and harder to control. The number of quantum vibrational modes (whose quanta are called phonons) increases with the number of ions. As more ions are added, limiting unwanted phonons becomes harder, because the lowest energy needed to excite a mode decreases. This is fundamental to the physics involved, and there is no way to get around it. Recall that the longest chain IonQ has created has 40 ions. IonQ’s competitor, Quantinuum, which is ahead of IonQ in many respects, has a device with 56 qubits, which requires at least 56 ions. Fortunately, the number of control electrodes needed grows only linearly with the number of ions. There is no hard upper bound on how long a chain can be, but overall the engineering challenges grow non-linearly as the size of the chain grows.
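
The mode-softening effect can be reproduced with a standard textbook model: identical ions in a harmonic trap with Coulomb repulsion, using the dimensionless units common in the trapped-ion literature (lengths in units of (e^2 / 4 pi eps0 m wz^2)^(1/3), frequencies in units of the axial trap frequency wz). This is a sketch, not IonQ's design; the radial-to-axial frequency ratio `ALPHA = 30` and all function names are hypothetical. A chain of N ions has 3N vibrational modes (N along the chain, 2N transverse), and with the trap held fixed, the lowest transverse mode frequency drops as ions are added.

```python
import numpy as np

def _energy(u):
    i, j = np.triu_indices(len(u), k=1)
    return 0.5 * np.sum(u ** 2) + np.sum(1.0 / np.abs(u[i] - u[j]))

def _grad_hess(u):
    diff = u[:, None] - u[None, :]
    np.fill_diagonal(diff, 1.0)                  # dummy value; diagonal terms are zeroed below
    inv2 = np.sign(diff) / diff ** 2
    inv3 = 1.0 / np.abs(diff) ** 3
    np.fill_diagonal(inv2, 0.0)
    np.fill_diagonal(inv3, 0.0)
    grad = u - inv2.sum(axis=1)                  # trap restoring force minus Coulomb push
    hess = -2.0 * inv3
    np.fill_diagonal(hess, 1.0 + 2.0 * inv3.sum(axis=1))
    return grad, hess

def equilibrium_positions(n, tol=1e-10, max_iter=200):
    """Damped Newton minimisation of the chain's potential energy."""
    u = np.linspace(-1.0, 1.0, n) * np.sqrt(n)   # ordered initial guess
    for _ in range(max_iter):
        g, h = _grad_hess(u)
        if np.max(np.abs(g)) < tol:
            break
        step = np.linalg.solve(h, -g)
        t = 1.0
        while True:                              # backtrack: keep ions ordered, energy non-increasing
            trial = u + t * step
            if np.all(np.diff(trial) > 0) and _energy(trial) <= _energy(u) + 1e-12:
                break
            t *= 0.5
        u = trial
    return u

def normal_modes(n, alpha):
    """mu: squared axial mode frequencies (units of wz^2), ascending.
    radial_sq: squared transverse mode frequencies for radial/axial ratio alpha."""
    _, hess = _grad_hess(equilibrium_positions(n))
    mu = np.linalg.eigvalsh(hess)
    radial_sq = alpha ** 2 - (mu - 1.0) / 2.0
    return mu, radial_sq

ALPHA = 30.0  # hypothetical radial/axial trap-frequency ratio, held fixed
for n in (2, 5, 10, 20, 40):
    mu, radial_sq = normal_modes(n, ALPHA)
    print(f"{n:2d} ions: {3 * n} modes in total, "
          f"lowest transverse mode at {np.sqrt(radial_sq.min()):.1f} x wz")
```

If `ALPHA` were too small for a given N, the lowest `radial_sq` would go negative: the linear chain would buckle into a zigzag, which is one reason long chains need ever stiffer transverse confinement.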

Since these ions are positively charged, they want to fly apart. As more and more ions are added onto the ends of a 1D chain, they have to be spaced further and further apart to hold them in place. If you look at pictures in IonQ’s marketing materials (like the picture above), you’ll see that the ions near the center are spaced closer together, while the ions near the edge of the chain have to be spaced further apart. Roughly speaking, the required length of an ion chain scales as N^(4/3). Researchers are also investigating whether it is possible to trap the ions in a 2D grid, rather than a 1D chain. The area required for a 2D grid scales as N^(2/3), rather than N^(1/2). It should be clarified here that 2D grids remain a complete fantasy: they have not been demonstrated yet.
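
The uneven spacing falls out of a simple toy model: identical point charges in a harmonic well, with lengths in the dimensionless unit (e^2 / 4 pi eps0 m wz^2)^(1/3). The sketch below, with the hypothetical helper `chain_positions`, finds the equilibrium by minimising trap energy plus Coulomb energy; it is a textbook model, not any company's trap geometry.

```python
import numpy as np

def chain_positions(n, tol=1e-10, max_iter=200):
    """Equilibrium positions of n ions in a harmonic trap (dimensionless units),
    found by damped Newton minimisation of trap energy + Coulomb energy."""
    def energy(u):
        i, j = np.triu_indices(n, k=1)
        return 0.5 * np.sum(u ** 2) + np.sum(1.0 / np.abs(u[i] - u[j]))

    u = np.linspace(-1.0, 1.0, n) * np.sqrt(n)       # ordered initial guess
    for _ in range(max_iter):
        diff = u[:, None] - u[None, :]
        np.fill_diagonal(diff, 1.0)                   # dummy value, zeroed below
        inv2 = np.sign(diff) / diff ** 2
        inv3 = 1.0 / np.abs(diff) ** 3
        np.fill_diagonal(inv2, 0.0)
        np.fill_diagonal(inv3, 0.0)
        grad = u - inv2.sum(axis=1)
        if np.max(np.abs(grad)) < tol:
            break
        hess = -2.0 * inv3
        np.fill_diagonal(hess, 1.0 + 2.0 * inv3.sum(axis=1))
        step = np.linalg.solve(hess, -grad)
        t = 1.0
        while not (np.all(np.diff(u + t * step) > 0)
                   and energy(u + t * step) <= energy(u) + 1e-12):
            t *= 0.5                                  # shrink step until ions stay ordered
        u = u + t * step
    return u

positions = chain_positions(15)
gaps = np.diff(positions)
print("gaps from edge to centre:", np.round(gaps[:7], 3))
print(f"centre gap / edge gap = {gaps[7] / gaps[0]:.2f}")
```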

According to both Claude and GPT-4o, it is unlikely we will ever have 1D ion traps with more than 100 ions, or 2D grids with more than 10,000. To get around these limitations, IonQ has proposed that two qubits living in separate traps may be entangled via a quantum photonic interconnect. As of October 2024, this has not yet been demonstrated. IonQ’s roadmap says they will achieve this in 2025. As to how feasible this is, the details here are quite complex, and I don’t have time to fully dig into this for this cursory review, so I can’t really say. However, my intuition tells me this will be extremely challenging for them. As I discuss later, quantum-grade photonic devices haven’t been miniaturized yet. Furthermore, my guess is that linking one qubit from one chip to another will not be enough; you’ll have to link multiple qubits, or even the majority of qubits. However, if a robust link is achieved, that could make ion traps a clear front-runner vs. other approaches.

Rigetti Computing - superconducting qubits

Rigetti Computing was formed in 2013 by former IBM physicist Chad Rigetti and is headquartered in sunny Berkeley, California. Like IBM, Rigetti is pursuing a superconducting approach. Superconducting qubits come in many forms, but researchers have settled on “transmon qubits” as the most promising approach, and that is what both IBM and Rigetti use now. In superconductors, electrons bunch up into Cooper pairs and this enables them to travel through a material’s crystal lattice without encountering any resistance. The movement of electrons (current flow) through a superconductor can be described by Schrödinger’s equation. So, a superconductor is characterized by a well-defined macroscopic wavefunction, something which is rare in physics and requires very cold temperatures.

If two superconductors are separated by a thin insulating barrier, the Cooper pairs can tunnel through the barrier, even though classically they would not have enough energy to do so. Now, the phase of the macroscopic wavefunctions in the two different superconductors is going to be different. As a result, the current of Cooper pairs crossing the barrier oscillates as a function of time, even if the electric potential applied to the system is static and not changing. This phenomenon is known as the Josephson effect and the system as a whole is called a Josephson junction. Remarkably, the oscillating current created by the Josephson effect does not dissipate energy. Now, if a “shunting” capacitor is wired across the junction, you end up with a quantum mechanical circuit which is known as a “transmission-line shunted plasma oscillation” or transmon. Since it is quantum mechanical, the oscillations in this circuit have discrete energy levels. If one applies microwave frequency AC pulses, the energy of the transmon’s oscillation can be increased. Due to the nonlinear properties of the Josephson effect, the transmon’s energy levels are unevenly spaced, which is a very useful property for control purposes. The uneven spacing of the transmon’s energy levels means it is hard to accidentally excite the transmon to a much higher energy level than the target energy level. In quantum computing, each of these transmon circuits constitutes one qubit. Transmon qubits are entangled using either capacitive coupling, microwave resonators, or a variety of other approaches.
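
The uneven level spacing can be checked numerically. The sketch below diagonalizes the standard textbook transmon Hamiltonian, H = 4*Ec*(n - ng)^2 - Ej*cos(phi), in the basis of Cooper-pair number states. The energies Ej = 12.5 GHz and Ec = 0.25 GHz (so Ej/Ec = 50), the offset charge `ng = 0`, and the cutoff `n_cut` are illustrative assumptions, not Rigetti's or IBM's parameters. The 1->2 transition comes out a few hundred MHz below the 0->1 transition (roughly Ec), which is what lets a microwave pulse tuned to 0->1 avoid driving 1->2.

```python
import numpy as np

def transmon_levels(ej, ec, ng=0.0, n_cut=20, n_levels=3):
    """Lowest eigenenergies of H = 4*Ec*(n - ng)^2 - Ej*cos(phi), written in the
    basis of Cooper-pair number states |n>, n = -n_cut..n_cut."""
    n = np.arange(-n_cut, n_cut + 1)
    h = np.diag(4.0 * ec * (n - ng) ** 2)
    hop = np.full(len(n) - 1, -0.5 * ej)     # cos(phi) couples |n> to |n+1> and |n-1>
    h = h + np.diag(hop, 1) + np.diag(hop, -1)
    return np.linalg.eigvalsh(h)[:n_levels]  # eigvalsh returns ascending eigenvalues

EJ, EC = 12.5, 0.25  # hypothetical energies in GHz (Ej/Ec = 50)
e0, e1, e2 = transmon_levels(EJ, EC)
f01, f12 = e1 - e0, e2 - e1
print(f"f01 = {f01:.3f} GHz, f12 = {f12:.3f} GHz, anharmonicity = {f12 - f01:.3f} GHz")
print(f"perturbative estimate sqrt(8*Ej*Ec) - Ec = {np.sqrt(8 * EJ * EC) - EC:.3f} GHz")
```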

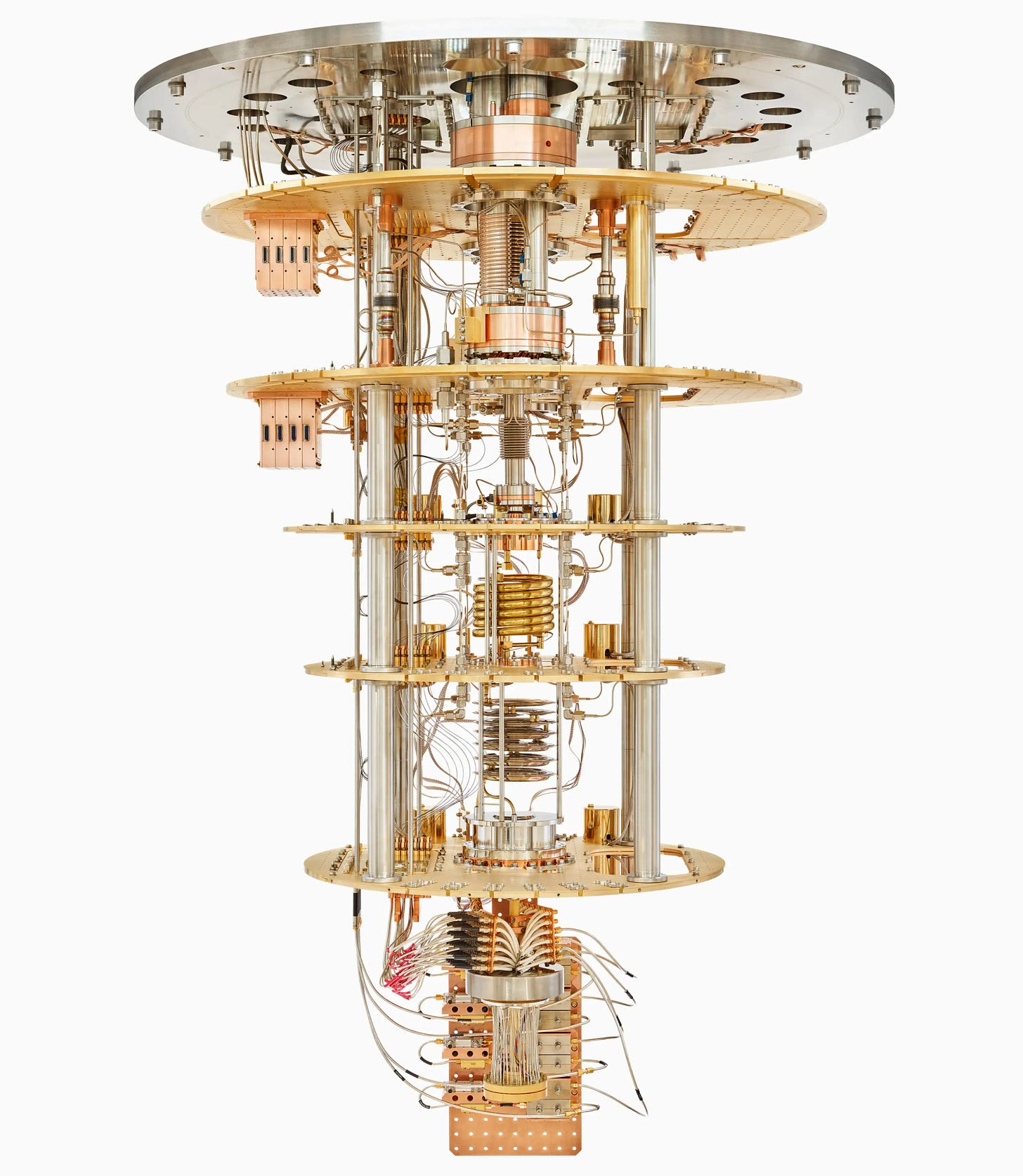

Two different superconducting materials are used in these quantum computers: the qubits are made with aluminum wires, and the superconducting wiring connecting them is made with niobium. Aluminum needs to be cooled to 1.2 Kelvin to become superconducting, while niobium requires a temperature of 9.2 Kelvin. However, to reduce thermal energy below the transmon’s level spacing and to avoid rapid decoherence, these circuits must be cooled much colder, to about 0.02 Kelvin (20 millikelvin). This ultra-cold requirement, which is dictated by the physics involved, is one of the main challenges for this approach. Large and complex cooling systems known as dilution refrigerators are required. Oxford Instruments’ Nanoscience division manufactures these refrigerators for Rigetti.
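
The reason for cooling far below the superconducting transition can be made concrete with the Boltzmann factor: a two-level system whose levels are separated by h*f is thermally excited with probability 1/(1 + exp(h*f / (k*T))). Assuming a transition frequency of 5 GHz (an assumption; the text gives no number), the sketch compares the three temperatures mentioned above.

```python
import math

H_PLANCK = 6.62607015e-34   # Planck constant, J*s
K_B = 1.380649e-23          # Boltzmann constant, J/K

def excited_population(freq_hz, temp_k):
    """Thermal probability of finding an ideal two-level system in its upper state."""
    x = H_PLANCK * freq_hz / (K_B * temp_k)
    return 1.0 / (1.0 + math.exp(x))

F_QUBIT = 5e9  # assumed 5 GHz qubit transition frequency
for temp in (9.2, 1.2, 0.02):
    print(f"{temp:>5} K: P(excited) = {excited_population(F_QUBIT, temp):.2e}")
```

At niobium's or aluminum's transition temperature the qubit would be thermally excited almost half the time; at 20 millikelvin this drops to a few parts per million.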

Similarly to the ion traps we discussed in the previous section, superconducting quantum systems are very delicate and are sensitive to vibrations and stray EM fields. (Both can cause problems, but the main issue here is stray EM fields, not vibration.) So, the dilution refrigerator must be suspended from a cage and isolated from the external environment:

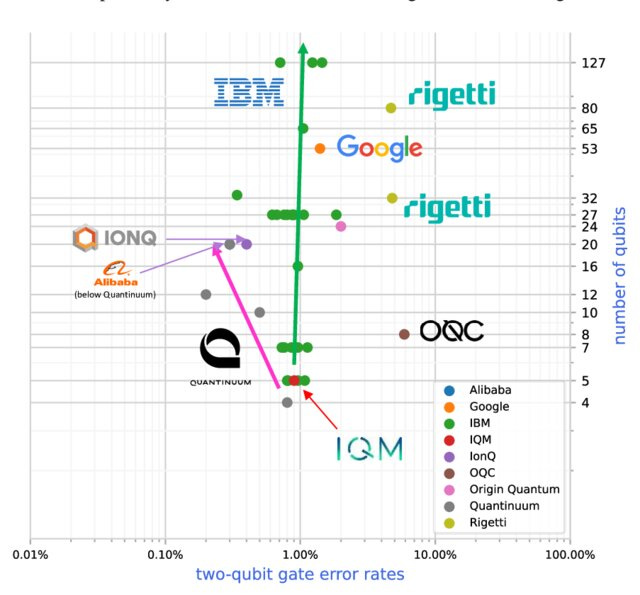

Rigetti’s latest “Ankaa” system has 84 qubits and has gate times between 56 and 72 nanoseconds, with gate fidelities between 99.0% and 99.5%. Rigetti’s gates are significantly faster than IBM’s, but they have lower fidelity. IBM’s systems also have more qubits. This graphic compiled in March 2023 by Olivier Ezratty summarizes how things stood back then. At that time, both IBM’s and Google’s systems were clearly superior. According to commentaries online, this is still the case.

Quantum Computing, Inc. - photonic qubits

Quantum Computing Inc. has a rather amusing history. For brevity, I’ll refer to them by their stock ticker, QUBT. QUBT was initially incorporated in Nevada on July 25, 2001, as Ticketcart, Inc., a company focusing on online ink-jet cartridge sales. In 2007, they acquired Innovative Beverage Group, Inc., and subsequently they changed their name to Innovative Beverage Group Holdings, Inc. (IBGH). This company went public in 2008. IBGH ceased operations and went into receivership in 2017-2018. In February 2018 it became QUBT. This transformation involved the shell of a failed company being used to create a publicly traded quantum computing company without having to go through the traditional Initial Public Offering (IPO) procedures. This process looks very suspicious, but is actually not that unusual nowadays, and so is not a major cause for concern in itself. Before getting to the physics, there are a few points about this company which I feel I must mention, because they should raise alarm bells for any investor. Firstly, their recent NASA contract was only worth $26,000. Secondly, the company claims to have a “fabrication foundry” in Arizona for building “nonlinear optical devices based on periodically poled lithium niobate.” An investigation by Iceberg Research has cast considerable doubt on the legitimacy of this claim.

Anyway, QUBT has a completely different approach compared to the other two companies discussed so far. Their approach is based on the control and manipulation of photons, a field called photonics. The precise details on how their system works are murky, and the materials they have published are hard to understand, but I can talk in general terms about what they are trying to do.

Qubits can be realized with photons in a couple of different ways. Recall that a qubit is a two-state quantum-mechanical system. In a “polarization qubit,” a photon is put into a superposition of horizontal and vertical polarization. In a “photonic path qubit,” a photon is put into a superposition over two different paths. There are also some more esoteric approaches. It’s not clear which of these approaches QUBT is using. I asked GPT-4o for help on this question, and after searching the web it said that the precise nature of their qubits has not been disclosed.
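
A photonic path qubit can be illustrated with a Mach-Zehnder interferometer, a standard textbook setup (we don't know whether QUBT uses anything like it). The first 50:50 beam splitter puts a single photon into a superposition of two paths, a phase shifter acts as a single-qubit gate, and a second beam splitter turns that phase into a measurable change in which detector clicks. The beam-splitter matrix below uses one common convention; others differ by phases.

```python
import numpy as np

# One common convention for a lossless 50:50 beam splitter acting on (path 0, path 1).
BEAM_SPLITTER = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)

def phase_shifter(phi):
    """Adds phase phi to path 1 only."""
    return np.diag([1.0, np.exp(1j * phi)])

def mach_zehnder(phi):
    photon = np.array([1.0, 0.0])              # photon enters on path 0
    photon = BEAM_SPLITTER @ photon            # now (|path0> + i|path1>)/sqrt(2): a path qubit
    photon = phase_shifter(phi) @ photon       # single-qubit "gate"
    photon = BEAM_SPLITTER @ photon            # recombine so the phase becomes visible
    return np.abs(photon) ** 2                 # detection probability at each output

for phi in (0.0, np.pi / 2, np.pi):
    print(f"phi = {phi:.2f}: detector probabilities = {np.round(mach_zehnder(phi), 3)}")
```

Any extra absorption or stray phase in the glass shows up directly as a change in these probabilities, which is why the optics must be so clean.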

Photonic quantum computers can run at room temperature. They may also suffer less from decoherence. Two photons flying through empty space can pass right through each other without interacting (except at extremely high energies). This sounds wonderful. However, difficulties are encountered when it comes to trying to control the state of these photons and entangle them together using optical devices. The interaction of the photons with optical devices (like crystals, glass, etc.) can cause their quantum state to decohere.

In quantum mechanics, measurement is usually defined as a process which completely collapses a wavefunction, destroying any quantum superposition. So normally in quantum computation, measurement is only performed at the very end of the computation, to extract the result. However, it is possible to do weak measurements which only partially collapse a wavefunction or barely perturb it at all.

By doing repeated weak measurements, a wavefunction can be “frozen” and prevented from evolving in unwanted directions. This is called the quantum Zeno effect. QUBT claims to be using this effect to counter-balance decoherence. Because decoherence is usually a highly random process, I’m a little skeptical about this claim.
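
Here is the textbook version of the quantum Zeno effect, as a sketch. Note that it uses ideal, strong (projective) measurements rather than the weak measurements QUBT describes, and it says nothing about how their hardware works. A qubit is slowly rotated from |0> toward |1>; checking it N times along the way keeps resetting it, so the probability of still finding |0> at the end approaches 1 as N grows.

```python
import math

def zeno_survival(total_angle, n_checks):
    """Probability that a qubit starting in |0> is still found in |0> after a rotation
    by total_angle (radians) is split into n_checks equal steps, each followed by an
    ideal projective measurement. One step of angle a keeps |0> with probability cos(a/2)^2."""
    step = total_angle / n_checks
    return math.cos(step / 2) ** (2 * n_checks)

for n in (1, 10, 100, 1000):
    print(f"{n:5d} checks: P(still |0>) = {zeno_survival(math.pi, n):.3f}")
```

Without any checks, a rotation by pi would take |0> fully to |1>; frequent checks "freeze" it. This works for a slow, deterministic rotation; whether it helps against random environmental noise is a separate question.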

It’s worth mentioning in passing that, while weak measurement is an actual thing, it is poorly understood. I did some research on this topic as part of a class project in 2011. At that time, the subject was controversial, and some physicists I talked to argued that the concept itself was confused and/or that the purported phenomena were not real. It looks like the phenomena of weak measurement and the quantum Zeno effect are both more established and accepted now, but I think there is still ongoing controversy over how they should be understood.

Even if decoherence is brought under control in a photonic quantum computer, photons may be absorbed when moving through optical devices. Obviously this is not good. Random loss of photons in these quantum computers is practically unavoidable, but it can be dealt with. To do so you need an enormous overhead of error correcting qubits.
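
Because each optical element absorbs or scatters a small fraction of the light, losses compound multiplicatively. The sketch below assumes hypothetical per-component transmissions of 99% and 99.9% and independent losses; it shows why even very good components limit how many elements a photon can pass through before it is more likely lost than not.

```python
import math

def photon_survival(transmission, n_components):
    """Chance a photon passes n_components elements, each with the given
    transmission, assuming independent losses."""
    return transmission ** n_components

def max_components(transmission, min_survival):
    """Largest number of elements a photon can cross while survival stays >= min_survival."""
    return math.floor(math.log(min_survival) / math.log(transmission))

for eta in (0.99, 0.999):   # hypothetical per-component transmissions
    print(f"transmission {eta}: survival after 100 components = {photon_survival(eta, 100):.3f}, "
          f"survival stays >= 50% for up to {max_components(eta, 0.5)} components")
```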

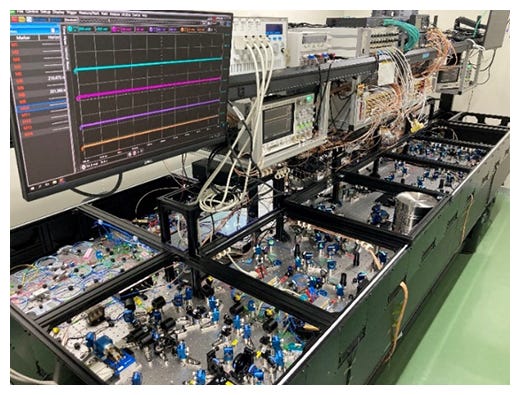

Finally, let’s discuss the scaling prospects for photonic quantum computers. Here’s what they look like currently:

What you are looking at here are numerous lasers, beam splitters, polarizers, mirrors, and other optical devices sitting on an optical table, which takes up most of a room. As with other quantum computers, photonic quantum computers are extremely sensitive to vibrations, so the optical table floats on compressed air to dampen vibrations. They don’t say how many qubits the system in the picture has, but my guess is it’s likely twenty qubits at most, yielding just a handful of AQs. However, let’s be generous and assume you could get 100 physical qubits from an optical table like this. Recall that for a useful quantum computer you need at least 1,000,000 AQs. Let’s assume the ratio of error correcting qubits to AQs is 100,000 to 1 (it could be as high as 250,000:1, but we’ll be generous here). Then you would need 1,000,000*100,000/100 = 1,000,000,000 optical tables. That’s a lot! However, the situation is actually worse than this. We assumed the number of optical devices required grows linearly with the number of algorithmic qubits. However, because you need a high density of entanglement between qubits for them to be useful, the number of devices required grows super-linearly. According to GPT-4o, the number of optical devices required for a useful photonic quantum computer grows quadratically with the number of qubits (roughly as N^2). So, you would need something like 1,000,000,000^2 or 1,000,000,000,000,000,000 optical tables worth of optical devices to get 1,000,000 AQs. Of course, further advances in quantum error correction could bring this number down somewhat, but even if we were able to get rid of quantum error correction completely, we’d still be looking at (1,000,000/100)^2 = 100,000,000 tables of equipment. (GPT-4o thinks this analysis is right; however, if you see a mistake, please comment below.)
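
The table-count arithmetic can be written out directly. All three inputs are the text's own assumptions; the quadratic case encodes the N^2 device-growth estimate, under which one table covers the devices for 100 qubits and the count becomes (N/100)^2.

```python
QUBITS_PER_TABLE = 100       # generous assumption from the text
TARGET_AQS = 1_000_000       # algorithmic qubits for a useful machine (the text's figure)
EC_RATIO = 100_000           # error-correcting qubits per AQ (the text's "generous" ratio)

def tables_linear(aqs, ratio, per_table):
    """Tables needed if optical hardware grows linearly with qubit count."""
    return aqs * ratio // per_table

def tables_quadratic(aqs, ratio, per_table):
    """Tables needed if hardware grows as N^2: one table holds the devices for
    per_table qubits (per_table^2 units), so the count is (N / per_table)^2."""
    return (aqs * ratio // per_table) ** 2

print(f"linear, with error correction:    {tables_linear(TARGET_AQS, EC_RATIO, QUBITS_PER_TABLE):.0e}")
print(f"quadratic, with error correction: {tables_quadratic(TARGET_AQS, EC_RATIO, QUBITS_PER_TABLE):.0e}")
print(f"quadratic, no error correction:   {tables_quadratic(TARGET_AQS, 1, QUBITS_PER_TABLE):.0e}")
```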

“OK,” you might say, “but surely all this will be miniaturized, just like we learned how to miniaturize circuits, radios, lasers, etc.” Consider this DGX superpod, which contains 256 H100 GPUs and can fit in a small datacenter:

Each GPU has 80 billion transistors, so there are at least 20.48 trillion transistors here (20,480,000,000,000), which is indeed a very large number of electrical devices in a small space.

With silicon technology, the density of components has been increasing exponentially over time: that’s Moore’s law in action. However, comparing these optical benches to silicon-based electronics is like comparing apples to oranges. The harsh reality is that we have no idea how to miniaturize most of the optical devices that are needed. Photonic quantum computers require ultra-high-end devices, at the very edge of our engineering abilities. These devices come from specialized vendors that have already spent decades refining their designs. Each laser needs to be super high precision, with zero frequency or mode drift, and the photon sources must yield individual photons on demand with near-perfect reliability. The mirrors have to be perfectly planar with near-zero absorption. The electro-optical modulators, optical fibers, and beam splitters also need to have extremely low absorption and scattering cross sections. Then, all of the devices have to be placed and aligned with exquisite precision. As any graduate student in photonic quantum computing can attest, aligning and calibrating one of these tables can take weeks or months. So, while in theory a lot of this could be miniaturized, it seems to me we are very far from that. Maybe superintelligent AI could teach us how to do it.

Comparing metrics for ion traps, superconducting, and photonic

Ratio of error correcting qubits to algorithmic qubits

This ratio is not fixed with the number of AQs — the number of error-correcting qubits needed scales super-linearly. The precise ratio needed also varies depending on the algorithm being run. I did a bunch of Google searching and talked with GPT-4o about this for a while, and here are some rough ranges for the ratio that would likely be needed for a useful quantum computer:

Trapped-ion quantum computer: 10 - 1,000

Superconducting quantum computer: 400 - 10,000

Photonic quantum computer: 1,000 - 500,000
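
To see why the ratio depends so strongly on hardware quality, here is a sketch using a common rule of thumb for the surface code (a widely studied error-correcting code, used here only as an example; it is not necessarily what any of these companies use): the logical error rate is roughly A * (p/p_th)^((d+1)/2), where p is the physical error rate, p_th the threshold, and d the code distance, and a rotated surface-code patch of distance d uses 2d^2 - 1 physical qubits. The values A = 0.03, p_th = 1%, and a target logical error rate of 10^-12 are assumptions chosen for illustration.

```python
def surface_code_overhead(p_phys, p_target, p_threshold=0.01, prefactor=0.03):
    """Smallest odd code distance d with prefactor * (p_phys / p_threshold)**((d + 1) / 2)
    <= p_target, plus the physical qubits per logical qubit for a rotated surface-code
    patch (d*d data qubits + d*d - 1 measurement qubits). Rule-of-thumb model only."""
    ratio = p_phys / p_threshold
    if ratio >= 1:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while prefactor * ratio ** ((d + 1) / 2) > p_target:
        d += 2
    return d, 2 * d * d - 1

for p in (1e-3, 5e-3):   # hypothetical physical error rates: 0.1% and 0.5%
    d, n_phys = surface_code_overhead(p, p_target=1e-12)
    print(f"p = {p:.1%}: distance {d}, about {n_phys:,} physical qubits per logical qubit")
```

In this model, moving the physical error rate from 0.5% to 0.1% cuts the overhead by roughly a factor of ten, one reason the ranges quoted above are so wide.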

Best two-qubit gate fidelities so far

Scott Aaronson says this is the most important metric. Scott says that 99.9% is the lower threshold to get to a useful machine. Part of the excitement around quantum is that several companies are hovering right around this threshold, or have surpassed it in some experiments. All of these numbers are from Wikipedia, which seems to be one of the most up-to-date references online.

Trapped-ion quantum computer: 99.87% (Quantinuum), 99.3% (IonQ)

Superconducting quantum computer: 99.897% (IBM), 99.67% (Google), 94.7% (Rigetti)

Photonic quantum computer: 93.8% (Quandela)
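
One way to see why 99.9% is treated as a threshold: if each two-qubit gate fails independently with probability 1 - F, a circuit of G such gates runs without error with probability roughly F^G. This ignores single-qubit gates, readout, and error correction, so it is only a back-of-the-envelope sketch using fidelities from the list above.

```python
import math

def circuit_success(fidelity, n_gates):
    """Rough chance that n_gates two-qubit gates all succeed, assuming independent errors."""
    return fidelity ** n_gates

def gates_until_coin_flip(fidelity):
    """Largest gate count for which the success estimate stays at or above 50%."""
    return math.floor(math.log(0.5) / math.log(fidelity))

for f in (0.947, 0.993, 0.999):
    print(f"F = {f:.1%}: 1,000 gates -> {circuit_success(f, 1000):.2e}, "
          f"50% budget = {gates_until_coin_flip(f)} gates")
```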

Best quantum volume so far

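Quantum volume is a far superior metric compared to “number of qubits”, because it takes into account not just how many qubits there are but how well they can be entangled: a quantum volume of 2^n means the machine can reliably run random circuits n qubits wide and n layers deep.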

Trapped-ion quantum computer: ~2^20 (Quantinuum)

Superconducting quantum computer: 2^9 (IBM)

Photonic quantum computer: ? (probably < 2^9)
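
Quantum volume is measured with a specific protocol: random "square" circuits of width and depth n, scored by the heavy output probability (HOP), with a pass threshold of 2/3. The sketch below illustrates only the scoring step. It uses a random complex Gaussian state as a stand-in for an ideal random circuit's output and a simple global-noise mixture for the device; both are simplifying assumptions, and the real protocol also builds the circuits from random two-qubit gates and requires statistical confidence.

```python
import numpy as np

def heavy_output_probability(ideal_probs, device_probs):
    """Probability mass the device puts on 'heavy' bitstrings, i.e. those whose
    ideal probability is above the median ideal probability."""
    heavy = ideal_probs > np.median(ideal_probs)
    return float(device_probs[heavy].sum())

rng = np.random.default_rng(seed=7)
n_qubits = 12
dim = 2 ** n_qubits
amps = rng.normal(size=dim) + 1j * rng.normal(size=dim)
ideal = np.abs(amps) ** 2
ideal /= ideal.sum()                    # stand-in for an ideal random circuit's output
uniform = np.full(dim, 1.0 / dim)       # what a completely noisy device would produce

for noise in (0.0, 0.3, 0.6, 1.0):
    device = (1 - noise) * ideal + noise * uniform   # toy global-noise model
    hop = heavy_output_probability(ideal, device)
    print(f"noise = {noise:.1f}: HOP = {hop:.3f}, passes 2/3 threshold: {hop > 2 / 3}")
```

For an ideal device the heavy outputs carry about 85% of the probability (the theoretical value is (1 + ln 2)/2); for pure noise they carry exactly half, so noise pushes the score below 2/3 well before the device is completely random.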

Isn’t there a “Moore’s law” for quantum computers?

A lot of people think there is a "Moore's law" for quantum computing. For instance, some people think the number of qubits is increasing exponentially over time and will continue to do so. However, for Moore's law to be realized for CPUs, exponentially increasing levels of investment were required to maintain progress (I wrote about this in 2015). That only happened because CPUs are general purpose, with new applications opening up over time. Because new applications become available with more and more compute, the demand for general purpose compute is essentially infinite. Quantum computers are not general purpose: they only have one major proven application (decryption), plus a few highly questionable niche applications. Over the last decade, large governments and industry have invested billions into quantum computing. If there is little or no fruit soon, that funding could easily dry up, similar to how it did during the "AI winter" in the 1980s.

But what about D-Wave?

D-Wave Quantum Systems, Inc. was formed in 1999. It is named after d-wave superconductors. While they call themselves “The Quantum Computing Company” (TM), they don’t actually make quantum computers. They make devices called quantum annealers, which they claim can help speed up optimization problems like optimizing neural nets, etc. There is no proof of any benefit from their devices vs. traditional computing hardware. Despite their devices having no practical utility, they are extremely adept at marketing and tricking people into buying their devices. This marketing is misleading at best and fraudulent at worst. A decade or so ago, D-Wave marketed their machines as having hundreds or thousands of qubits. While this was technically true, their qubits were extremely noisy and completely unusable. Recently, D-Wave’s CEO went on CNBC and made a number of completely false statements about how customers were using their machines. Over the years, many organizations have bought devices from them, including NASA, the US Air Force, Lockheed Martin, and Google. Organizations buy from D-Wave out of fear of “falling behind the curve,” not because their devices have any practical utility. While there is much more I could say about D-Wave, I wanted to limit this article to actual quantum computing companies.

Thank you to ChatGPT, Claude, and Quillbot for helping me research and write this, and to Greg Fitzgerald and Ben Ballweg for providing feedback on an earlier draft of this post.

[1] Here’s a list of quantum computing startups that are private: Quantinuum, PsiQuantum, Xanadu, Quantum Machines, QuanaSys, Multiverse Computing, Oxford Quantum Circuits, 1QBit, PASQAL, Terra Quantum AG, Diraq, Universal Quantum, Infleqtion, Atom Computing, Quandela, Agnostiq, Alice&Bob, AEGIQ, Aliro Quantum, Alpine Quantum Technologies, AmberFlux, Anyon Systems Inc., Bleximo, Classiq, ColdQuanta, eleQtron, Elyah, QuAIL, Horizon Quantum Computing, IQM Quantum Computers, Kipu Quantum, Oxford Ionics, ParityQC, Phasecraft, Q.ANT, Qblox, QC Ware, Qedma, Qilimanjaro Quantum Tech, QpiAI, Quantum Benchmark, Quantum Brilliance, Quantum Circuits, Inc., QuEra Computing, Riverlane, Seeqc, Silicon Quantum Computing, QuiX Quantum. (That’s 48 startups! There are also several large companies working in this space, like IBM, Microsoft, Intel, Google, Booz Allen Hamilton, Lockheed Martin, and Hitachi.)

[2] Trapped ions, superconducting qubits, photonic qubits, and neutral atoms.
